Contents

- Kaspersky Next XDR Expert Help

- What's new

- About Kaspersky Next XDR Expert

- Architecture of Kaspersky Next XDR Expert

- OSMP Console interface

- Licensing

- About data provision

- Quick start guide

- Deployment of Kaspersky Next XDR Expert

- Hardening Guide

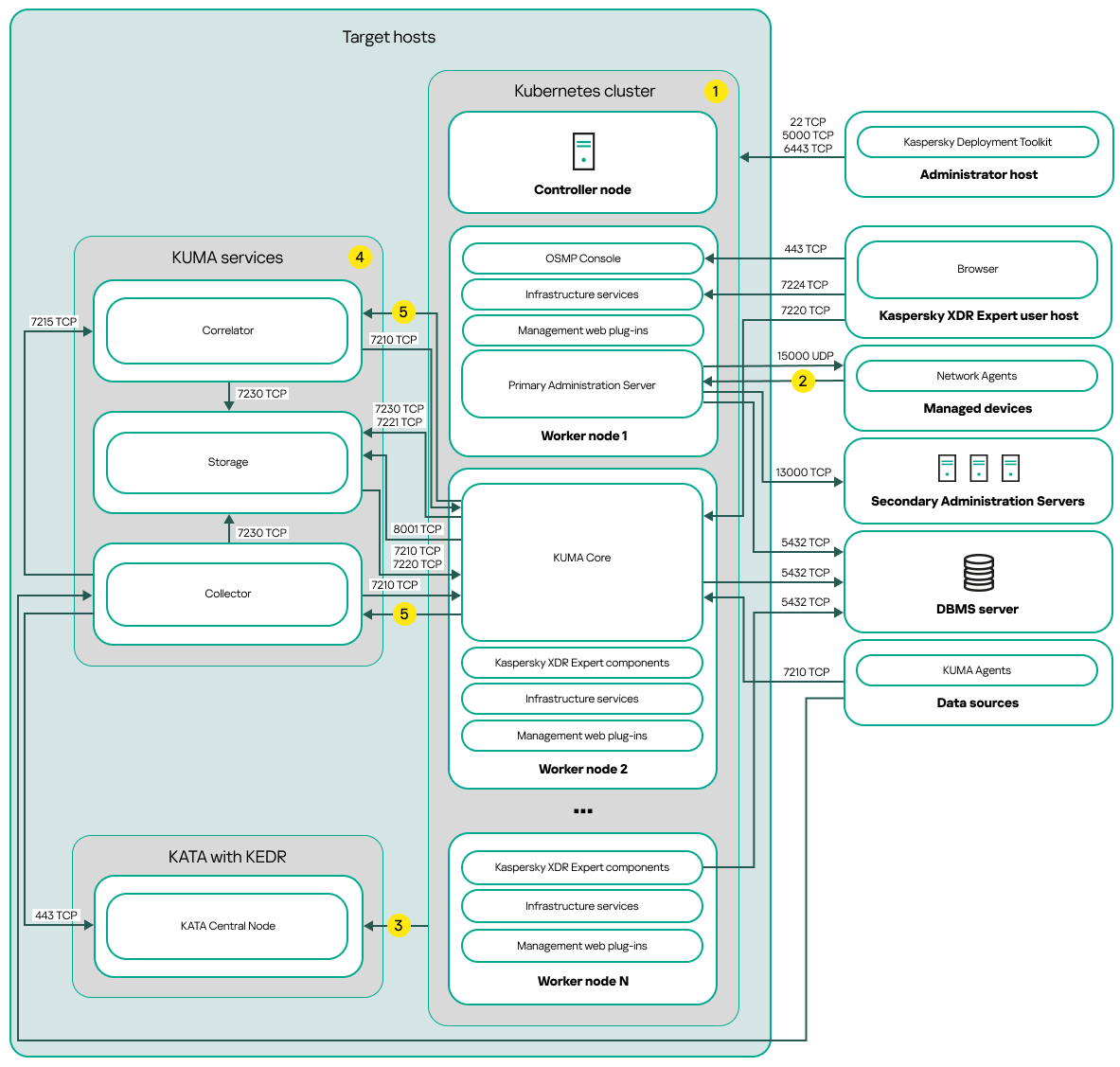

- Deployment scheme: Distributed deployment

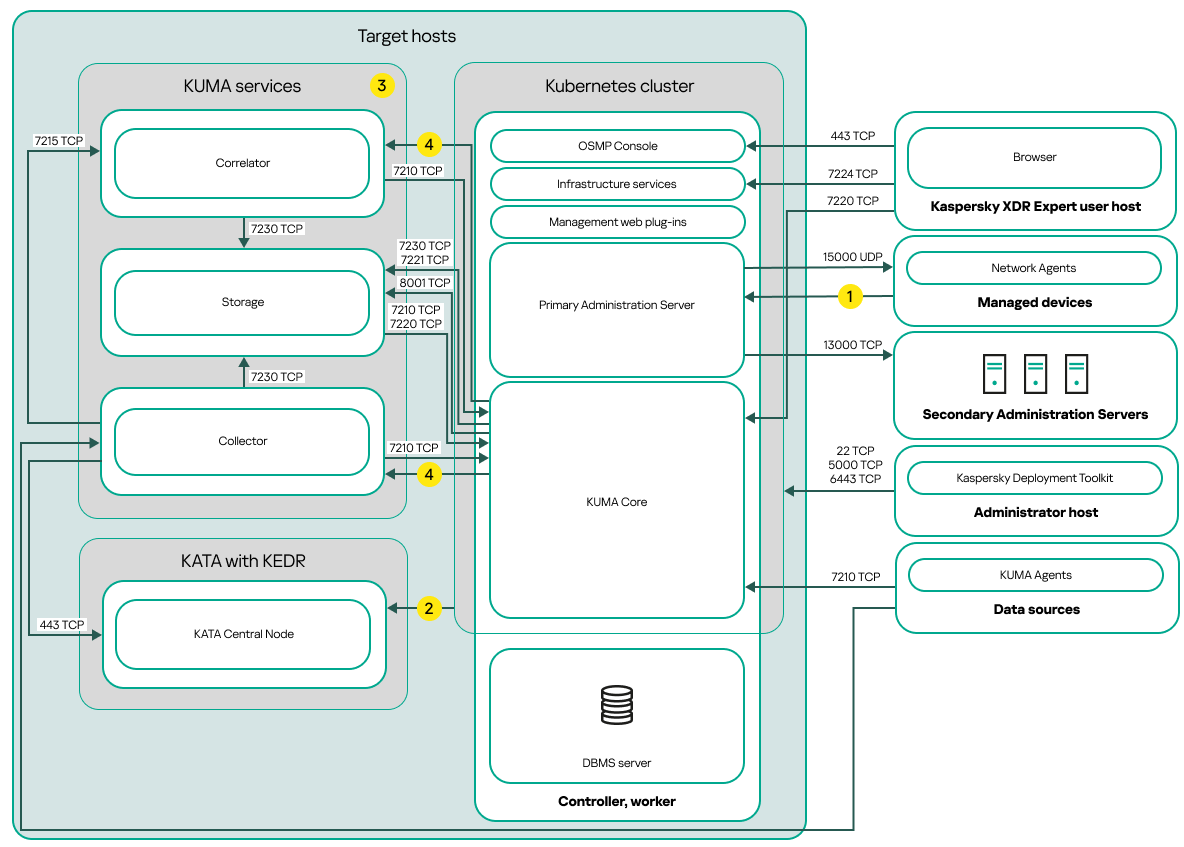

- Deployment scheme: Single node deployment

- Ports used by Kaspersky Next XDR Expert

- Preparation work and deployment

- Distributed deployment: Preparing the administrator and target hosts

- Single node deployment: Preparing the administrator and target hosts

- Preparing the hosts for installation of the KUMA services

- Installing a database management system

- Configuring the PostgreSQL or Postgres Pro server for working with Open Single Management Platform

- Preparing the KUMA inventory file

- Distributed deployment: Specifying the installation parameters

- Single node deployment: Specifying the installation parameters

- Specifying the installation parameters by using the Configuration wizard

- Installing Kaspersky Next XDR Expert

- Configuring internet access for the target hosts

- Synchronizing time on machines

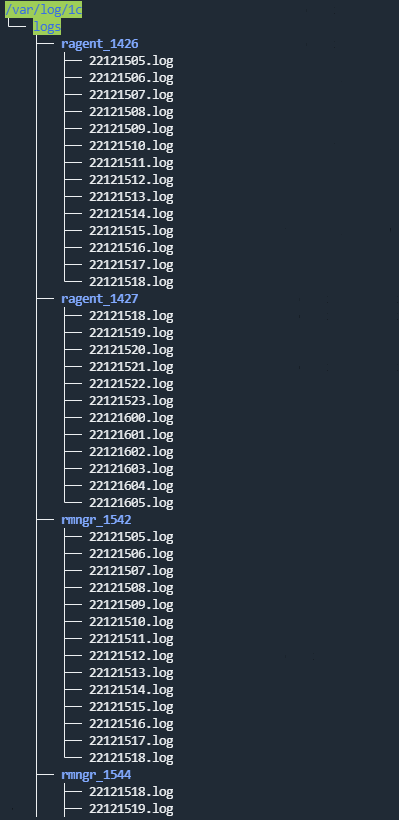

- Installing KUMA services

- Deployment of multiple Kubernetes clusters and Kaspersky Next XDR Expert instances

- Signing in to Kaspersky Next XDR Expert

- Kaspersky Next XDR Expert maintenance

- Updating Kaspersky Next XDR Expert components

- Versioning the configuration file

- Removing Kaspersky Next XDR Expert components and management web plug-ins

- Reinstalling Kaspersky Next XDR Expert after a failed installation

- Stopping the Kubernetes cluster nodes

- Using certificates for public Kaspersky Next XDR Expert services

- Modifying the self-signed KUMA Console certificate

- Calculation and changing of disk space for storing Administration Server data

- Rotation of secrets

- Adding hosts for installing the additional KUMA services

- Replacing a host that uses KUMA storage

- Migration to Kaspersky Next XDR Expert

- Integration with other solutions

- Threat detection

- Working with alerts

- About alerts

- Alert data model

- Viewing the alert table

- Viewing alert details

- Assigning alerts to analysts

- Changing an alert status

- Creating alerts manually

- Linking alerts to incidents

- Unlinking alerts from incidents

- Linking events to alerts

- Unlinking events from alerts

- Working with alerts on the investigation graph

- Working with incidents

- About incidents

- Incident data model

- Creating incidents

- Viewing the incident table

- Viewing incident details

- Assigning incidents to analysts

- Changing an incident status

- Changing an incident priority

- Merging incidents

- Editing incidents by using playbooks

- Investigation graph

- Segmentation rules

- Copying segmentation rules to another tenant

- Working with alerts

- Threat hunting

- Threat response

- Response actions

- Terminating processes

- Moving devices to another administration group

- Running a malware scan

- Viewing the result of the malware scan

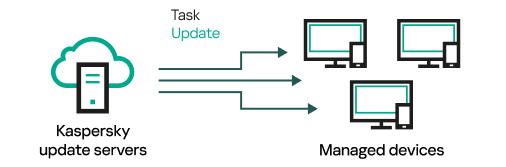

- Updating databases

- Moving files to quarantine

- Changing authorization status of devices

- Viewing information about KASAP users and changing learning groups

- Responding through Active Directory

- Responding through KATA/KEDR

- Responding through UserGate

- Responding through Ideco NGFW

- Responding through Ideco UTM

- Responding through Redmine

- Responding through Check Point NGFW

- Responding through Sophos Firewall

- Responding through Continent 4

- Responding through SKDPU NT

- Viewing response history from alert or incident details

- Playbooks

- Viewing the playbooks table

- Creating playbooks

- Editing playbooks

- Customizing playbooks

- Viewing playbook properties

- Terminating playbooks

- Deleting playbooks

- Launching playbooks and response actions

- Configuring manual approval of response actions

- Approving playbooks or response actions

- Enrichment from playbook

- Viewing response history

- Predefined playbooks

- Playbook trigger

- Playbook algorithm

- Response actions

- REST API

- Creating a token

- Authorizing API requests

- API operations

- Viewing a list of alerts

- Viewing a list of incidents

- Viewing a list of tenants

- Closing alerts

- Closing incidents

- Viewing a list of active lists on the correlator

- Importing entries to an active list

- Searching assets

- Importing assets

- Deleting assets

- Searching events

- Viewing information about the cluster

- Resource search

- Loading resource file

- Viewing the contents of a resource file

- Importing resources

- Exporting resources

- Downloading the resource file

- Searching services

- Viewing token bearer information

- Dictionary updating in services

- Dictionary retrieval

- Viewing custom fields of the assets

- Viewing the list of context tables in the correlator

- Importing records into a context table

- Exporting records from a context table

- Viewing a list of aggregation rules

- Creating an aggregation rule

- Replacing aggregation rules

- Managing Kaspersky Unified Monitoring and Analysis Platform

- About Kaspersky Unified Monitoring and Analysis Platform

- What's new

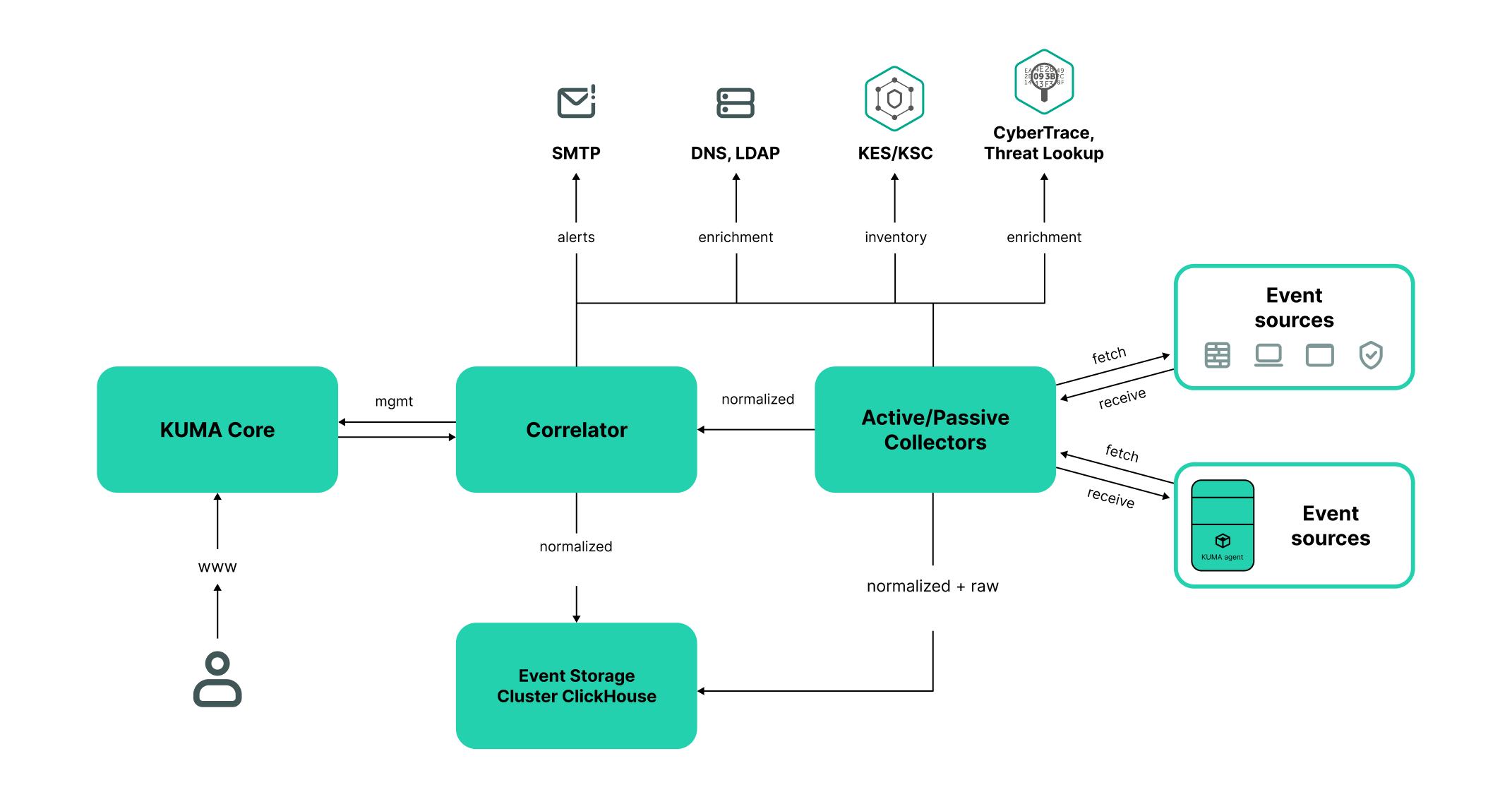

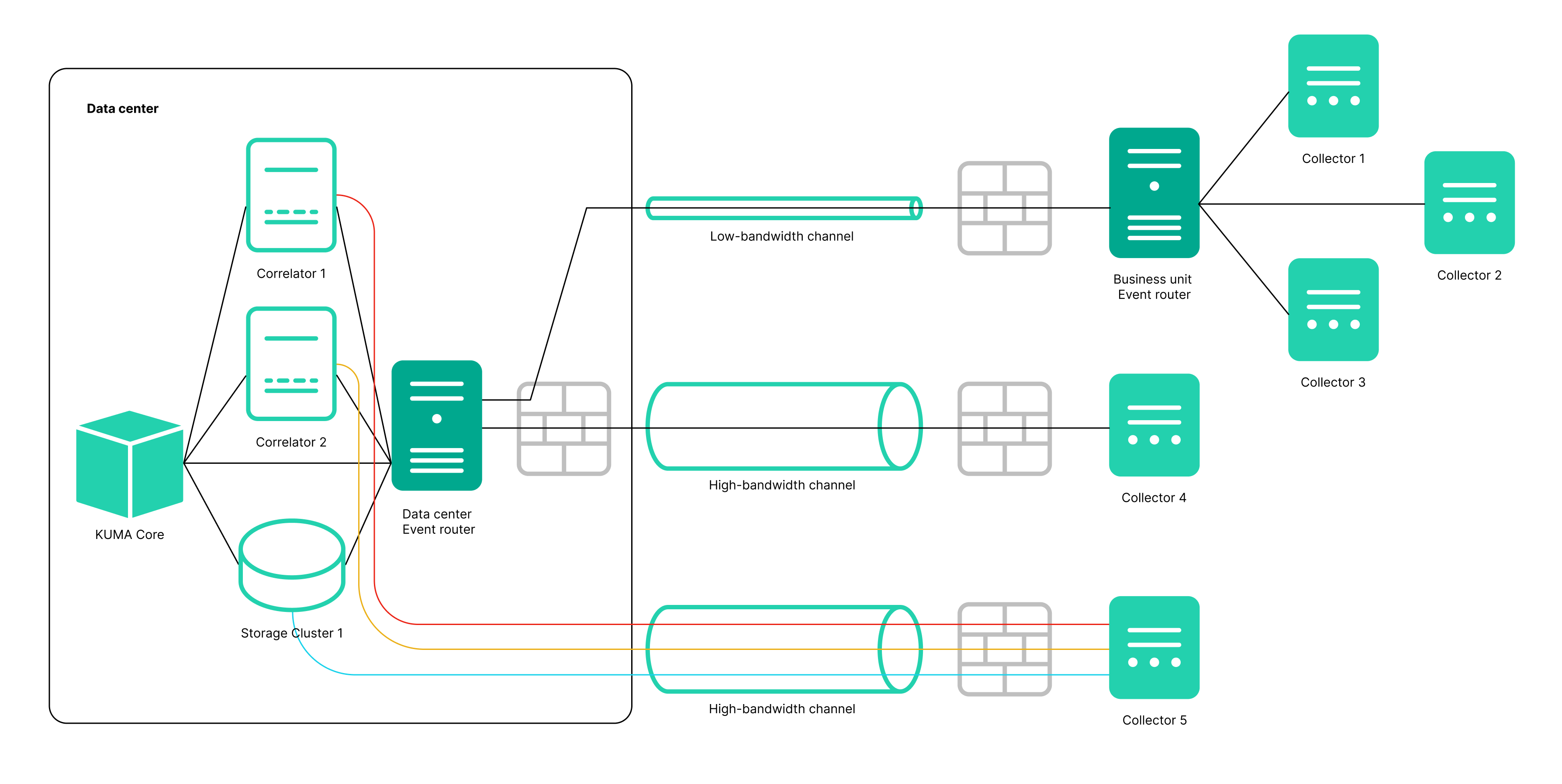

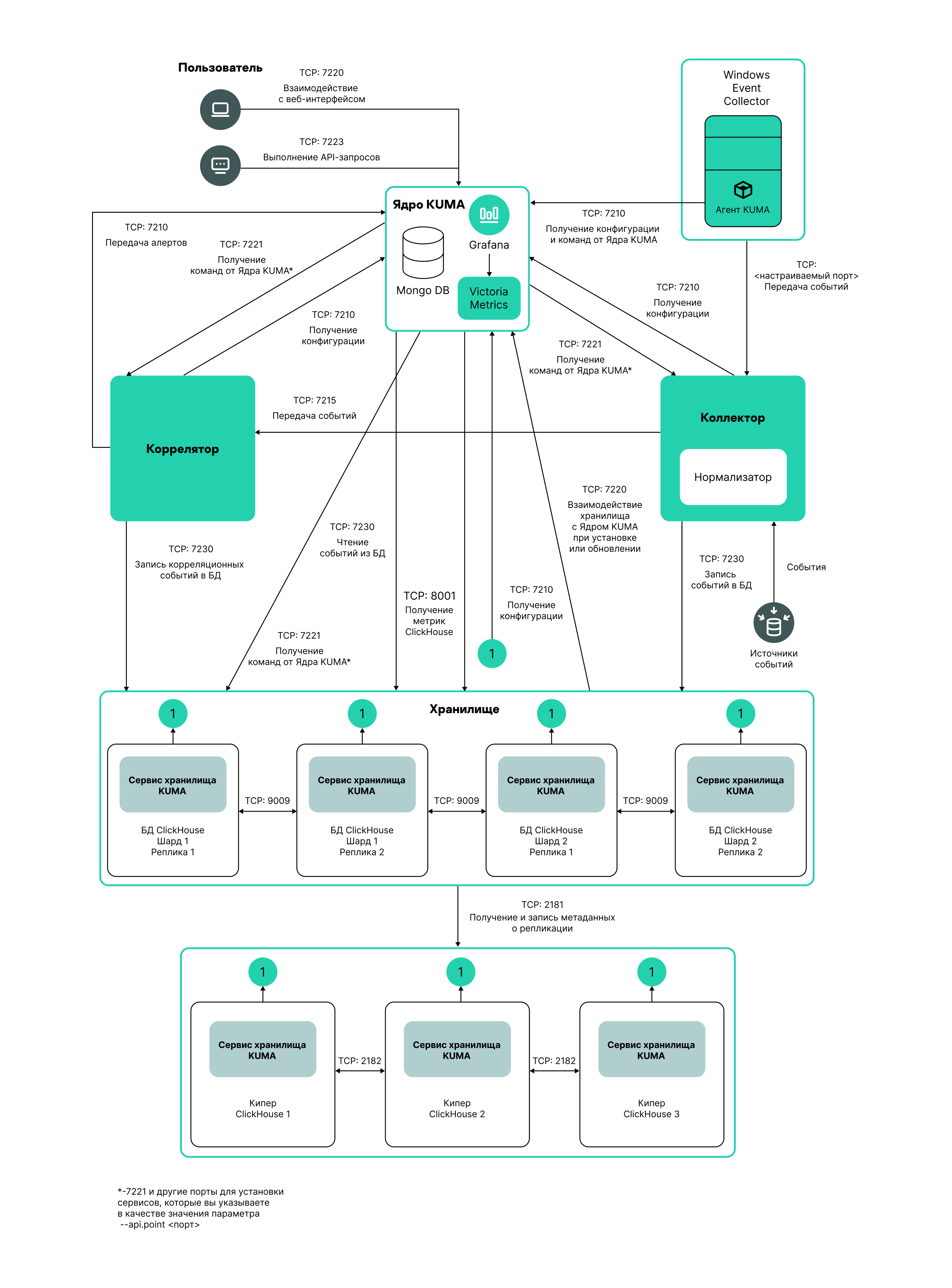

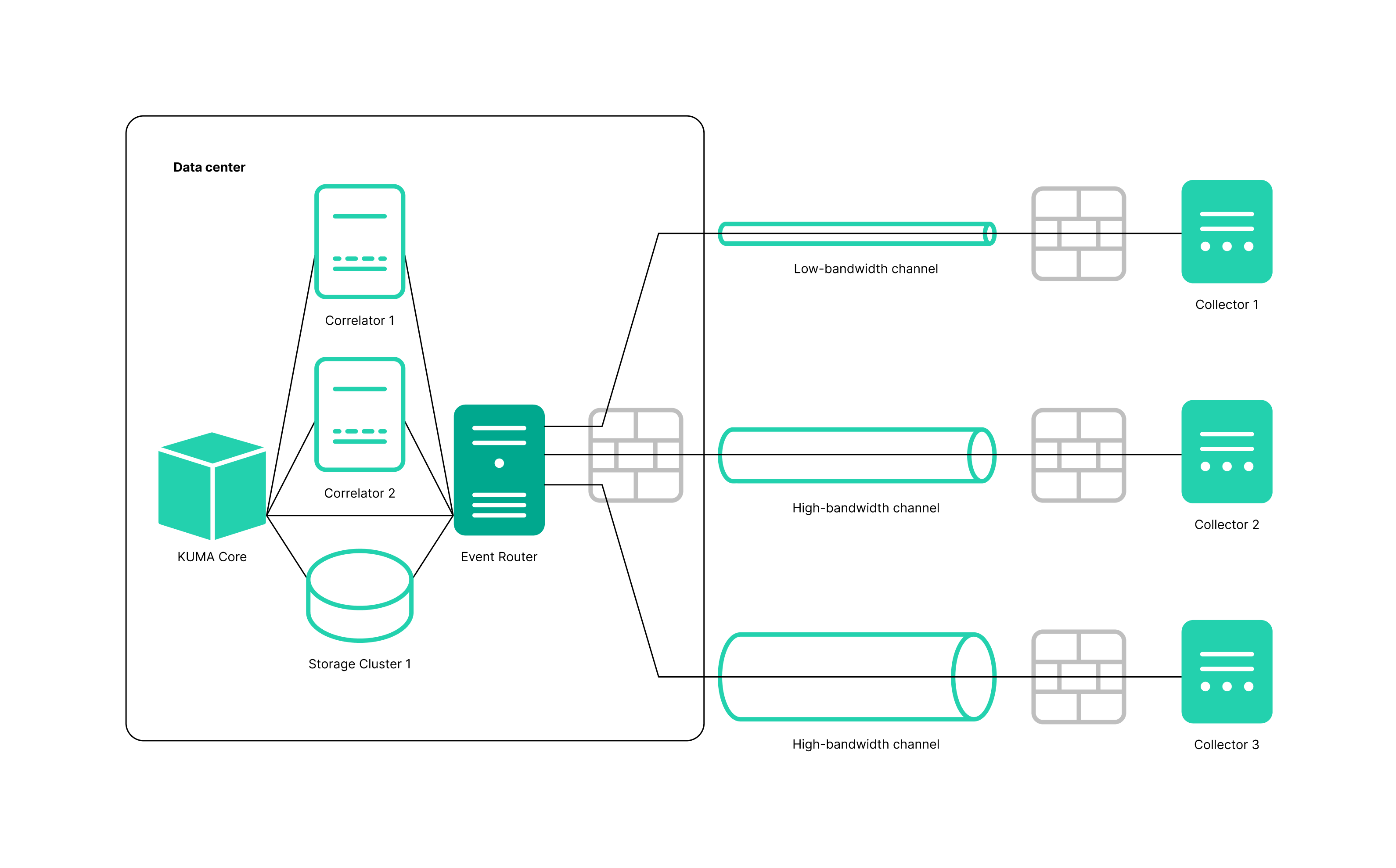

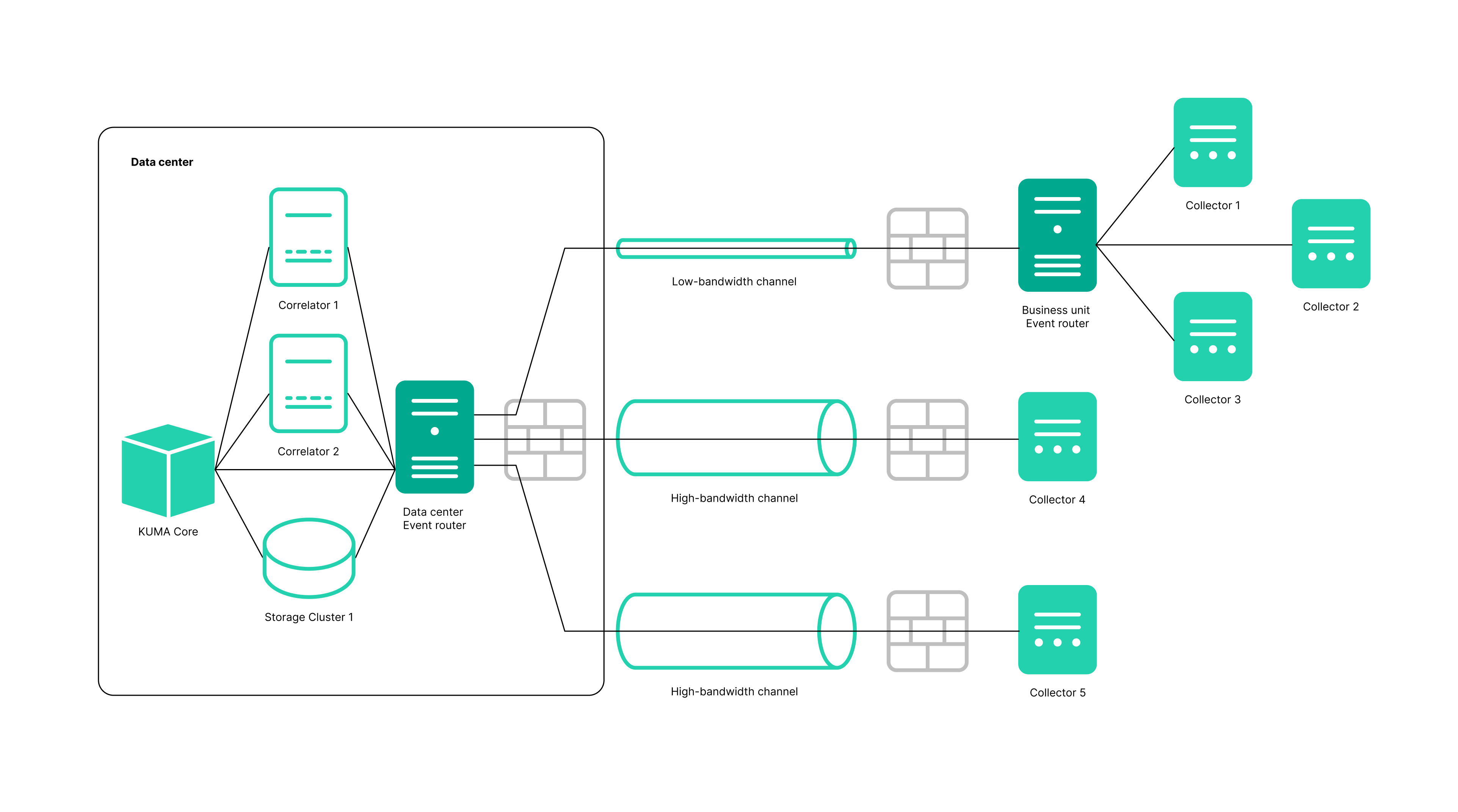

- Program architecture

- Administrator's guide

- Logging in to the KUMA Console

- KUMA services

- Services tools

- Service resource sets

- Creating a storage

- Creating a correlator

- Creating an event router

- Creating a collector

- Creating an agent

- Configuring event sources

- Configuring receipt of Auditd events

- Configuring receipt of KATA/EDR events

- Configuring receiving Kaspersky Security Center event from MS SQL

- Creating an account in the MS SQL database

- Configuring the SQL Server Browser service

- Creating a secret in KUMA

- Configuring a connector

- Configuring the KUMA Collector for receiving Kaspersky Security Center events from an MS SQL database

- Installing the KUMA Collector for receiving Kaspersky Security Center events from the MS SQL database

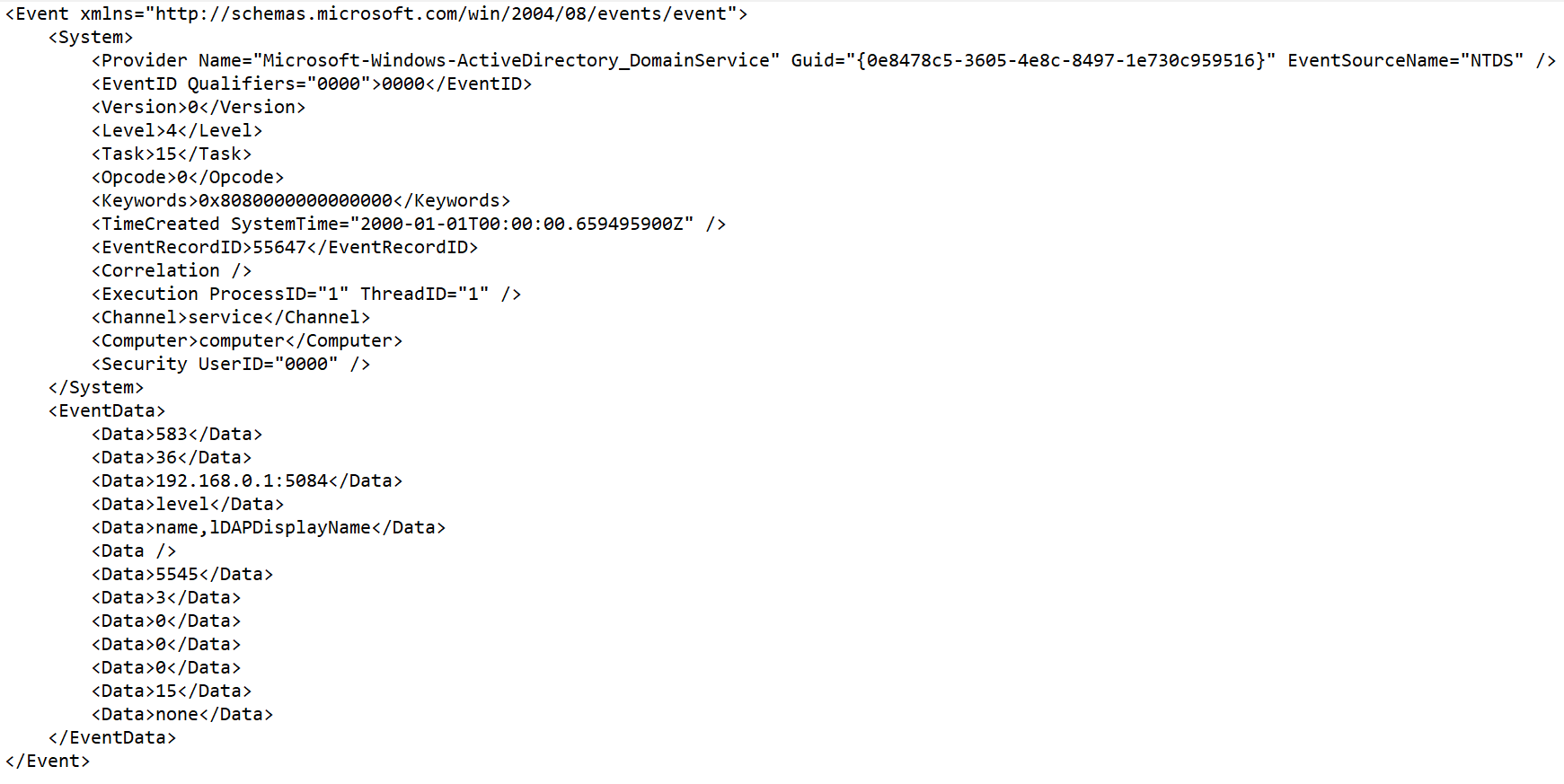

- Configuring receipt of events from Windows devices using KUMA Agent (WEC)

- Configuring audit of events from Windows devices

- Configuring centralized receipt of events from Windows devices using the Windows Event Collector service

- Granting permissions to view Windows events

- Granting permissions to log on as a service

- Configuring the KUMA Collector for receiving events from Windows devices

- Installing the KUMA Collector for receiving events from Windows devices

- Configuring forwarding of events from Windows devices to KUMA using KUMA Agent (WEC)

- Configuring receipt of events from Windows devices using KUMA Agent (WMI)

- Configuring receipt of PostgreSQL events

- Configuring receipt of IVK Kolchuga-K events

- Configuring receipt of CryptoPro NGate events

- Configuring receipt of Ideco UTM events

- Configuring receipt of KWTS events

- Configuring receipt of KLMS events

- Configuring receipt of KSMG events

- Configuring receipt of PT NAD events

- Configuring receipt of events using the MariaDB Audit Plugin

- Configuring receipt of Apache Cassandra events

- Configuring receipt of FreeIPA events

- Configuring receipt of VipNet TIAS events

- Configuring receipt of Nextcloud events

- Configuring receipt of Snort events

- Configuring receipt of Suricata events

- Configuring receipt of FreeRADIUS events

- Configuring receipt of VMware vCenter events

- Configuring receipt of zVirt events

- Configuring receipt of Zeek IDS events

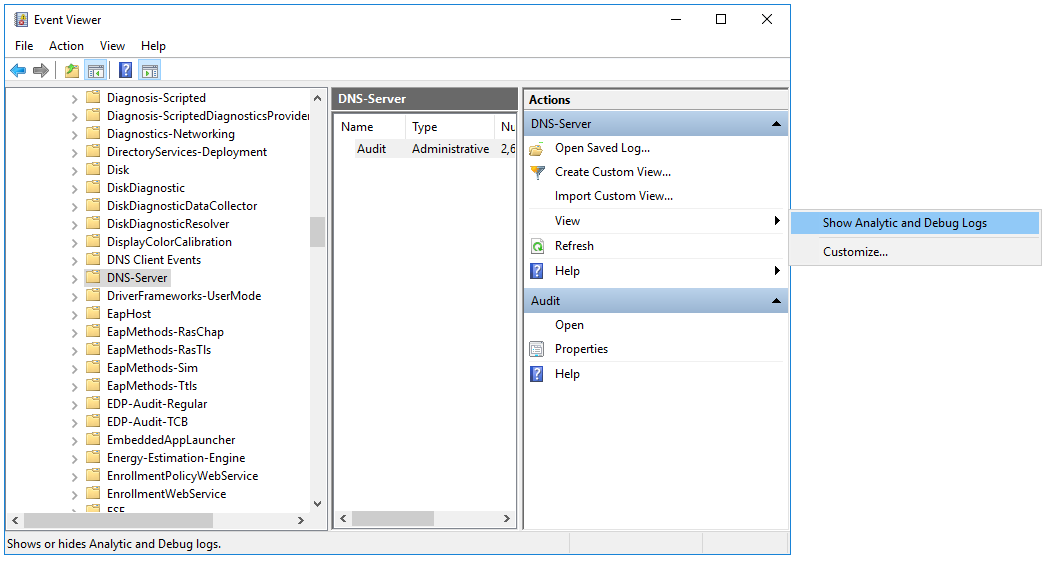

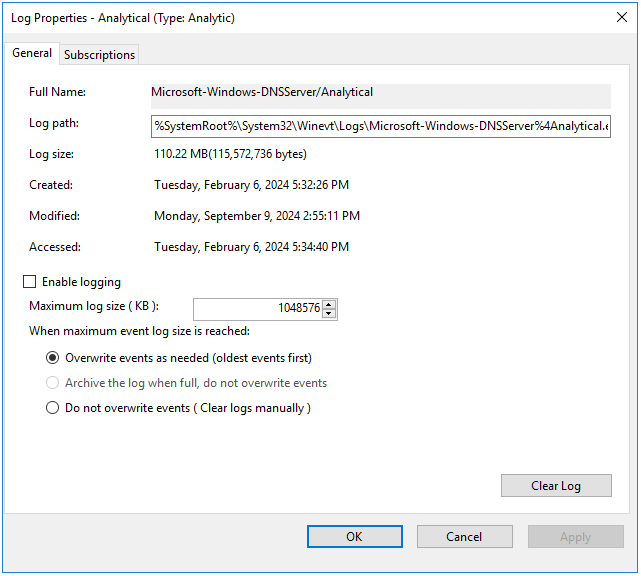

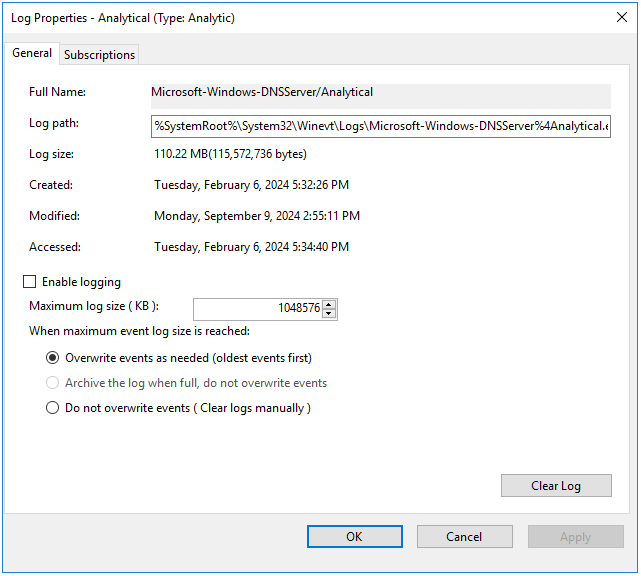

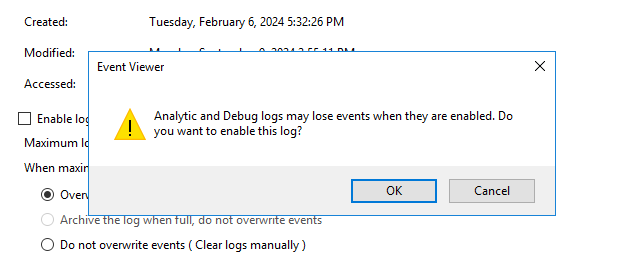

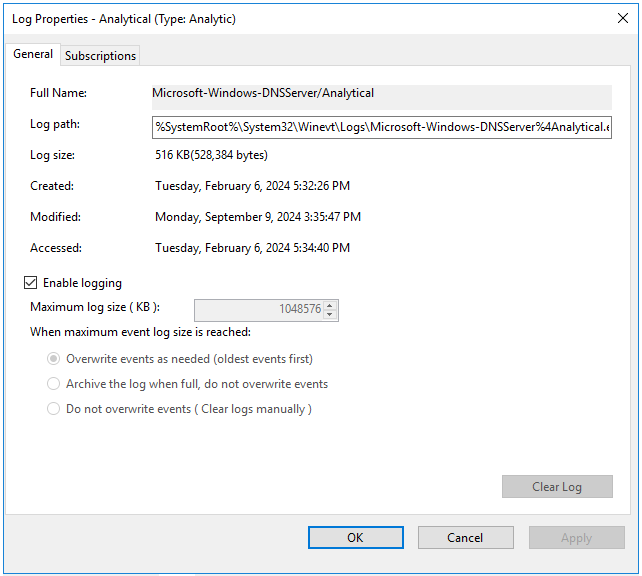

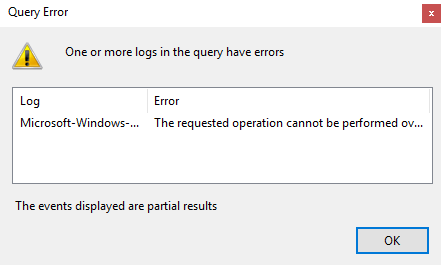

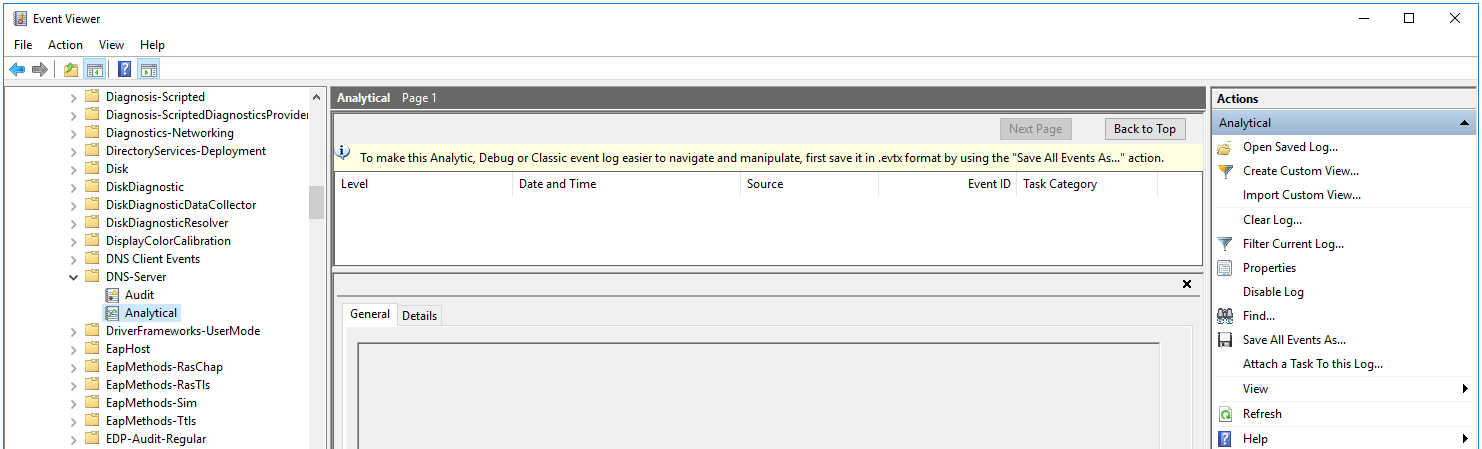

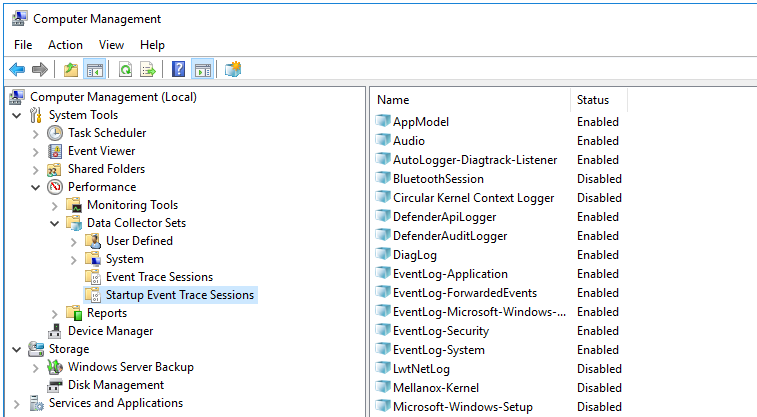

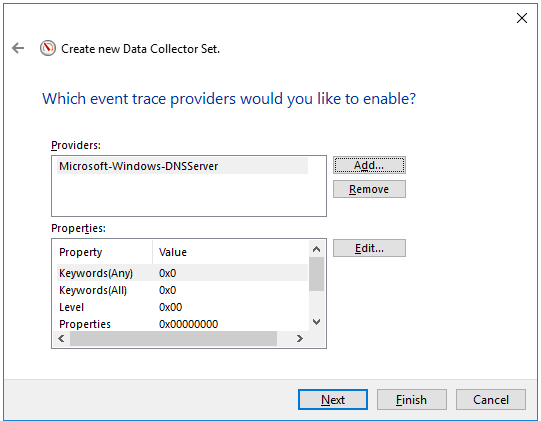

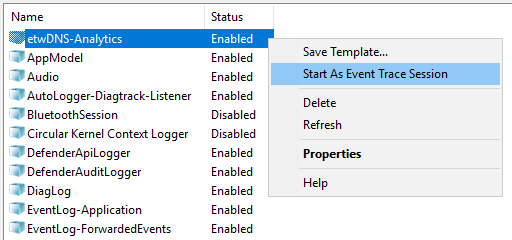

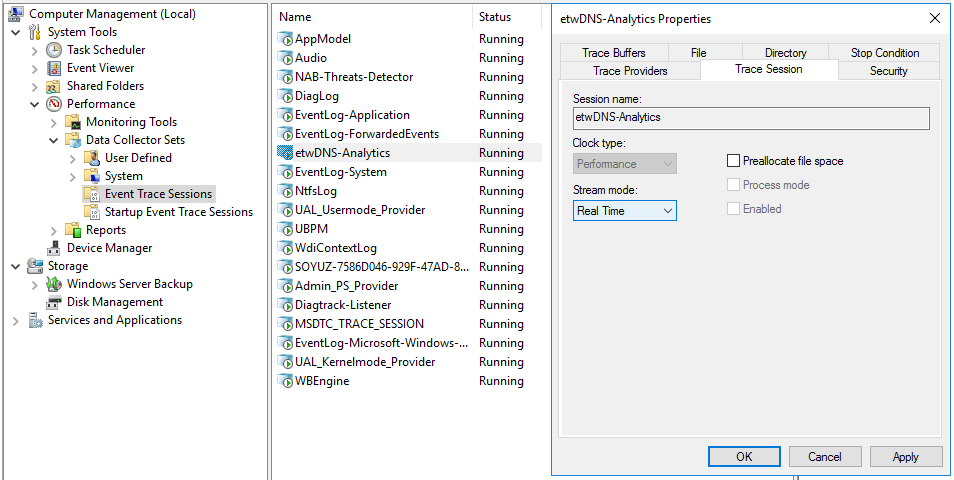

- Configuring DNS server event reception using the ETW connector

- Monitoring event sources

- Managing assets

- Adding an asset category

- Configuring the table of assets

- Searching assets

- Exporting asset data

- Viewing asset details

- Adding assets

- Adding asset information in the KUMA Console

- Importing asset information from Kaspersky Security Center

- Importing asset information from MaxPatrol

- Importing asset information from KICS for Networks

- Examples of asset field comparison during import

- Settings of the kuma-ptvm-config.yaml configuration file

- Assigning a category to an asset

- Editing the parameters of assets

- Archiving assets

- Deleting assets

- Updating third-party applications and fixing vulnerabilities on Kaspersky Security Center assets

- Moving assets to a selected administration group

- Asset audit

- Custom asset fields

- Critical information infrastructure assets

- Integration with other solutions

- Integration with Kaspersky Security Center

- Kaspersky Endpoint Detection and Response integration

- Integration with Kaspersky CyberTrace

- Integration with Kaspersky Threat Intelligence Portal

- Connecting over LDAP

- Enabling and disabling LDAP integration

- Adding a tenant to the LDAP server integration list

- Creating an LDAP server connection

- Creating a copy of an LDAP server connection

- Changing an LDAP server connection

- Changing the data update frequency

- Changing the data storage period

- Starting account data update tasks

- Deleting an LDAP server connection

- Kaspersky Industrial CyberSecurity for Networks integration

- Integration with Neurodat SIEM IM

- Kaspersky Automated Security Awareness Platform

- Sending notifications to Telegram

- UserGate integration

- Integration with Kaspersky Web Traffic Security

- Integration with Kaspersky Secure Mail Gateway

- Importing asset information from RedCheck

- Configuring receipt of Sendmail events

- Managing KUMA

- Working with geographic data

- User guide

- KUMA resources

- Operations with resources

- Destinations

- Normalizers

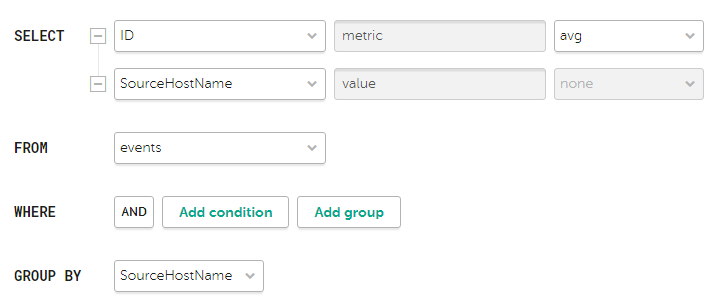

- Aggregation rules

- Enrichment rules

- Correlation rules

- Filters

- Active lists

- Viewing the table of active lists

- Adding active list

- Viewing the settings of an active list

- Changing the settings of an active list

- Duplicating the settings of an active list

- Deleting an active list

- Viewing records in the active list

- Searching for records in the active list

- Adding a record to an active list

- Duplicating records in the active list

- Changing a record in the active list

- Deleting records from the active list

- Import data to an active list

- Exporting data from the active list

- Predefined active lists

- Dictionaries

- Response rules

- Connectors

- Viewing connector settings

- Adding a connector

- Connector settings

- Secrets

- Context tables

- Viewing the list of context tables

- Adding a context table

- Viewing context table settings

- Editing context table settings

- Duplicating context table settings

- Deleting a context table

- Viewing context table records

- Searching context table records

- Adding a context table record

- Editing a context table record

- Deleting a context table record

- Importing data into a context table

- Analytics

- KUMA resources

- Working with Open Single Management Platform

- Basic concepts

- Administration Server

- Hierarchy of Administration Servers

- Virtual Administration Server

- Web Server

- Network Agent

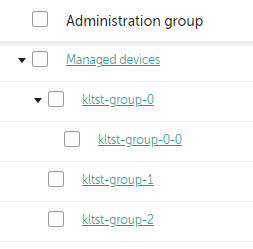

- Administration groups

- Managed device

- Unassigned device

- Administrator's workstation

- Management web plug-in

- Policies

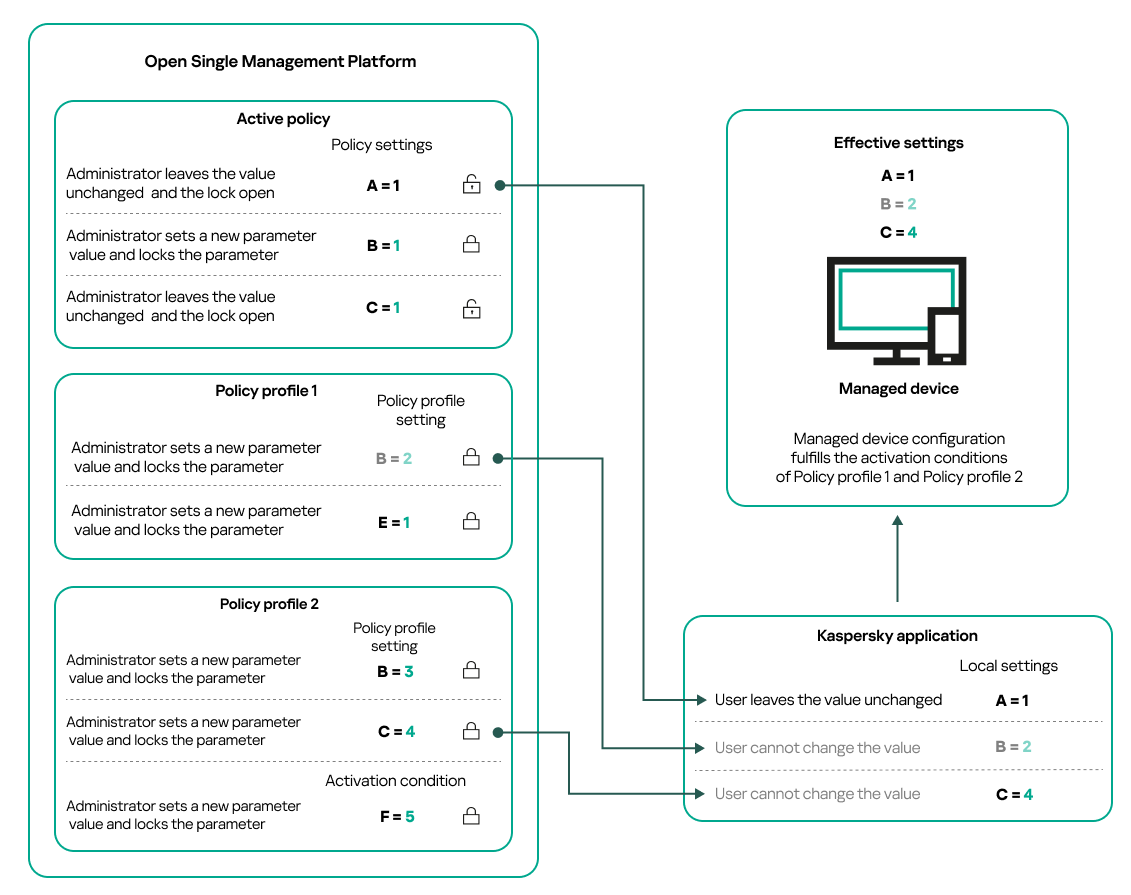

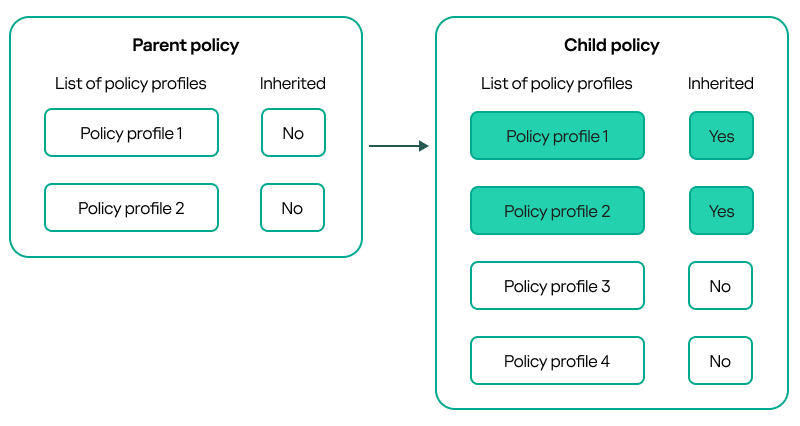

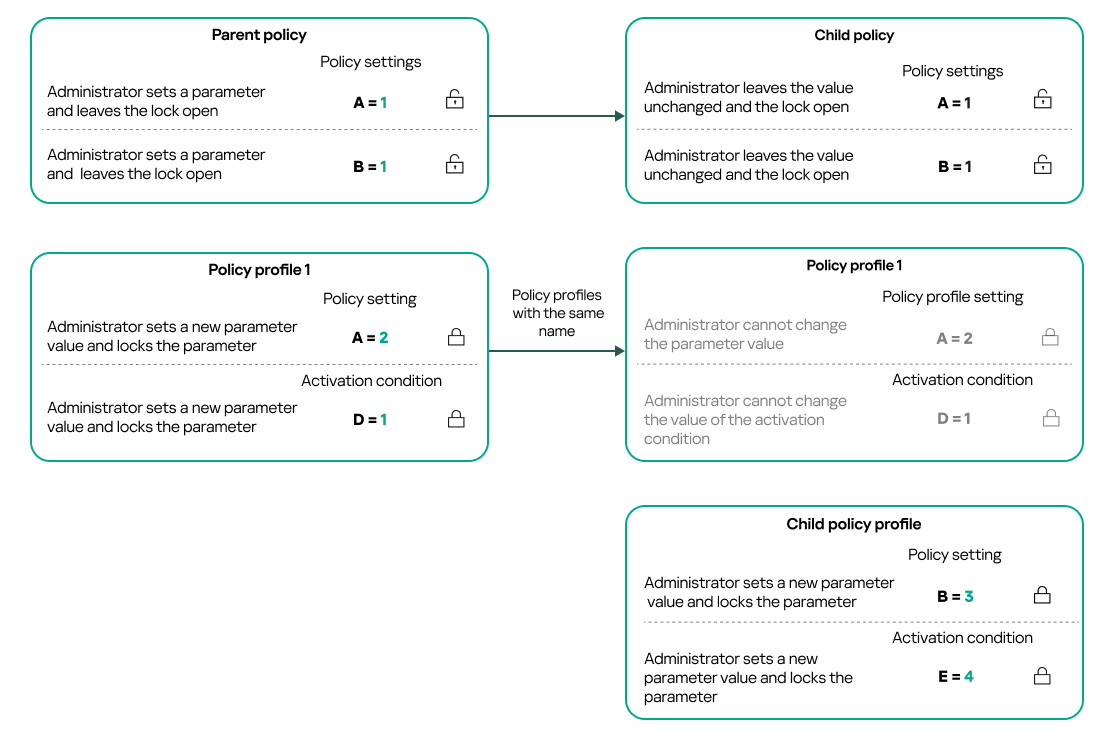

- Policy profiles

- Tasks

- Task scope

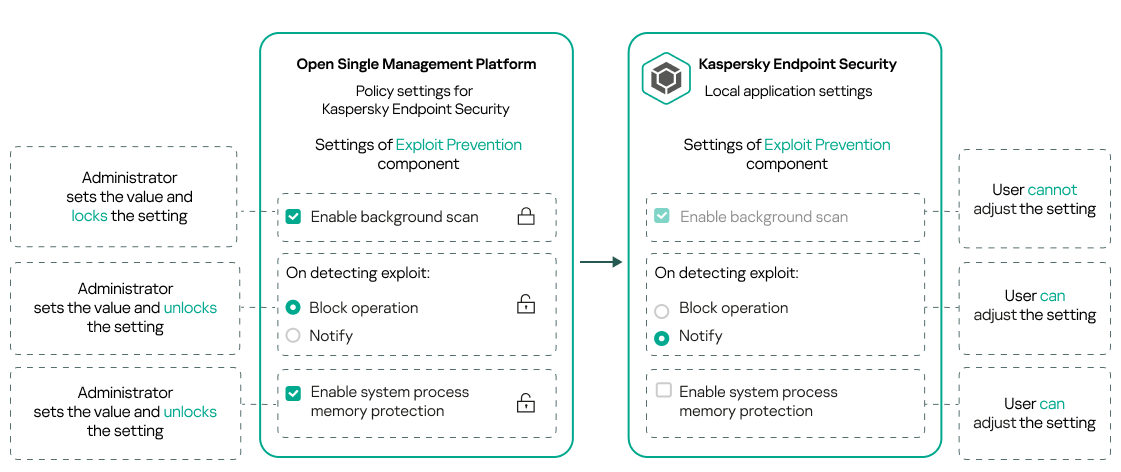

- How local application settings relate to policies

- Distribution point

- Connection gateway

- Configuring Administration Server

- Configuring the connection of OSMP Console to Administration Server

- Configuring internet access settings

- Certificates for work with Open Single Management Platform

- About Open Single Management Platform certificates

- Requirements for custom certificates used in Open Single Management Platform

- Reissuing the certificate for OSMP Console

- Replacing certificate for OSMP Console

- Converting a PFX certificate to the PEM format

- Scenario: Specifying the custom Administration Server certificate

- Replacing the Administration Server certificate by using the klsetsrvcert utility

- Connecting Network Agents to Administration Server by using the klmover utility

- Hierarchy of Administration Servers

- Creating a hierarchy of Administration Servers: adding a secondary Administration Server

- Viewing the list of secondary Administration Servers

- Managing virtual Administration Servers

- Configuring Administration Server connection events logging

- Setting the maximum number of events in the event repository

- Changing DBMS credentials

- Backup copying and restoration of the Administration Server data

- Deleting a hierarchy of Administration Servers

- Access to public DNS servers

- Configuring the interface

- Encrypt communication with TLS

- Discovering networked devices

- Managing client devices

- Settings of a managed device

- Creating administration groups

- Device moving rules

- Adding devices to an administration group manually

- Moving devices or clusters to an administration group manually

- About clusters and server arrays

- Properties of a cluster or server array

- Adjustment of distribution points and connection gateways

- Standard configuration of distribution points: Single office

- Standard configuration of distribution points: Multiple small remote offices

- Calculating the number and configuration of distribution points

- Assigning distribution points automatically

- Assigning distribution points manually

- Modifying the list of distribution points for an administration group

- Enabling a push server

- About device statuses

- Configuring the switching of device statuses

- Device selections

- Device tags

- Device tags

- Creating a device tag

- Renaming a device tag

- Deleting a device tag

- Viewing devices to which a tag is assigned

- Viewing tags assigned to a device

- Tagging a device manually

- Removing an assigned tag from a device

- Viewing rules for tagging devices automatically

- Editing a rule for tagging devices automatically

- Creating a rule for tagging devices automatically

- Running rules for auto-tagging devices

- Deleting a rule for tagging devices automatically

- Data encryption and protection

- Changing the Administration Server for client devices

- Viewing and configuring the actions when devices show inactivity

- Deploying Kaspersky applications

- Scenario: Kaspersky applications deployment

- Protection deployment wizard

- Starting Protection deployment wizard

- Step 1. Selecting the installation package

- Step 2. Selecting a method for distribution of key file or activation code

- Step 3. Selecting Network Agent version

- Step 4. Selecting devices

- Step 5. Specifying the remote installation task settings

- Step 6. Removing incompatible applications before installation

- Step 7. Moving devices to Managed devices

- Step 8. Selecting accounts to access devices

- Step 9. Starting installation

- Adding management plug-ins for Kaspersky applications

- Viewing the list of components integrated in Open Single Management Platform

- Viewing names, parameters, and custom actions of Kaspersky Next XDR Expert components

- Downloading and creating installation packages for Kaspersky applications

- Creating installation packages from a file

- Creating stand-alone installation packages

- Changing the limit on the size of custom installation package data

- Installing Network Agent for Linux in silent mode (with an answer file)

- Preparing a device running Astra Linux in the closed software environment mode for installation of Network Agent

- Viewing the list of stand-alone installation packages

- Distributing installation packages to secondary Administration Servers

- Preparing a Linux device and installing Network Agent on a Linux device remotely

- Installing applications using a remote installation task

- Specifying settings for remote installation on Unix devices

- Starting and stopping Kaspersky applications

- Replacing third-party security applications

- Removing applications or software updates remotely

- Preparing a device running SUSE Linux Enterprise Server 15 for installation of Network Agent

- Preparing a Windows device for remote installation. Riprep utility

- Configuring Kaspersky applications

- Scenario: Configuring network protection

- About device-centric and user-centric security management approaches

- Policy setup and propagation: Device-centric approach

- Policy setup and propagation: User-centric approach

- Policies and policy profiles

- Network Agent policy settings

- Usage of Network Agent for Windows, Linux, and macOS: Comparison

- Comparison of Network Agent settings by operating systems

- Manual setup of the Kaspersky Endpoint Security policy

- Configuring Kaspersky Security Network

- Checking the list of the networks protected by Firewall

- Disabling the scan of network devices

- Excluding software details from the Administration Server memory

- Configuring access to the Kaspersky Endpoint Security for Windows interface on workstations

- Saving important policy events in the Administration Server database

- Manual setup of the group update task for Kaspersky Endpoint Security

- Kaspersky Security Network (KSN)

- Managing tasks

- About tasks

- About task scope

- Creating a task

- Starting a task manually

- Starting a task for selected devices

- Viewing the task list

- General task settings

- Exporting a task

- Importing a task

- Starting the Change tasks password wizard

- Viewing task run results stored on the Administration Server

- Manual setup of the group task for scanning a device with Kaspersky Endpoint Security

- General task settings

- Application tags

- Granting offline access to the external device blocked by Device Control

- Registering Kaspersky Industrial CyberSecurity for Networks application in OSMP Console

- Managing users and user roles

- About user accounts

- About user roles

- Configuring access rights to application features. Role-based access control

- Adding an account of an internal user

- Creating a security group

- Editing an account of an internal user

- Editing a security group

- Assigning a role to a user or a security group

- Adding user accounts to an internal security group

- Assigning a user as a device owner

- Two-step verification

- Scenario: Configuring two-step verification for all users

- About two-step verification for an account

- Enabling two-step verification for your own account

- Enabling required two-step verification for all users

- Disabling two-step verification for a user account

- Disabling required two-step verification for all users

- Excluding accounts from two-step verification

- Configuring two-step verification for your own account

- Prohibit new users from setting up two-step verification for themselves

- Generating a new secret key

- Editing the name of a security code issuer

- Changing the number of allowed password entry attempts

- Deleting a user or a security group

- Creating a user role

- Editing a user role

- Editing the scope of a user role

- Deleting a user role

- Associating policy profiles with roles

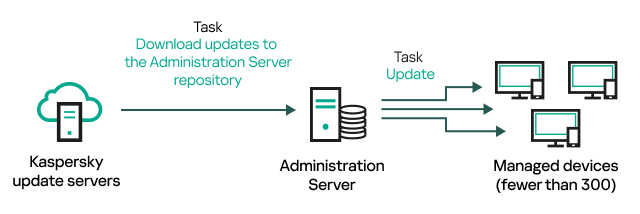

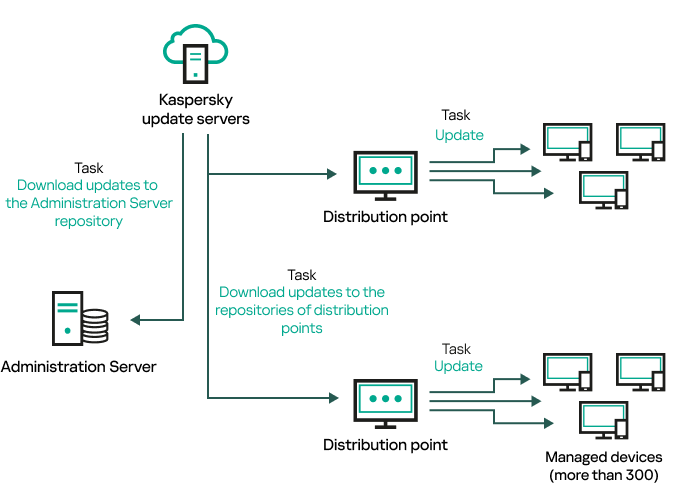

- Updating Kaspersky databases and applications

- Scenario: Regular updating Kaspersky databases and applications

- About updating Kaspersky databases, software modules, and applications

- Creating the Download updates to the Administration Server repository task

- Viewing downloaded updates

- Verifying downloaded updates

- Creating the task for downloading updates to the repositories of distribution points

- Adding sources of updates for the Download updates to the Administration Server repository task

- About using diff files for updating Kaspersky databases and software modules

- Enabling the Downloading diff files feature

- Downloading updates by distribution points

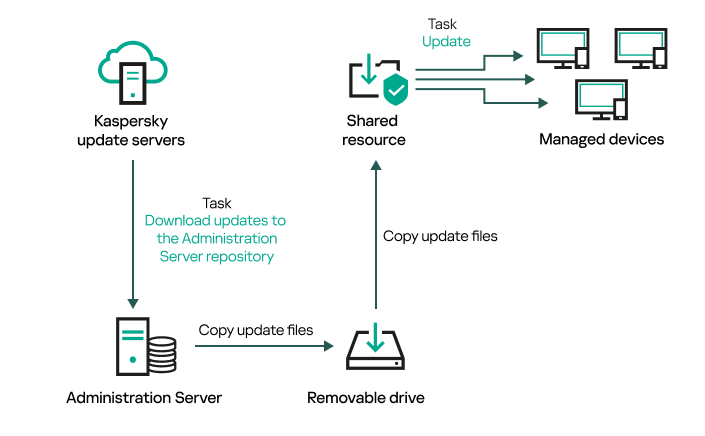

- Updating Kaspersky databases and software modules on offline devices

- Remote diagnostics of client devices

- Opening the remote diagnostics window

- Enabling and disabling tracing for applications

- Downloading trace files of an application

- Deleting trace files

- Downloading application settings

- Downloading system information from a client device

- Downloading event logs

- Starting, stopping, restarting the application

- Running the remote diagnostics of Kaspersky Security Center Network Agent and downloading the results

- Running an application on a client device

- Generating a dump file for an application

- Running remote diagnostics on a Linux-based client device

- Managing applications and executable files on client devices

- Using Application Control to manage executable files

- Application Control modes and categories

- Obtaining and viewing a list of applications installed on client devices

- Obtaining and viewing a list of executable files stored on client devices

- Creating an application category with content added manually

- Creating an application category that includes executable files from selected devices

- Creating an application category that includes executable files from selected folder

- Viewing the list of application categories

- Configuring Application Control in the Kaspersky Endpoint Security for Windows policy

- Adding event-related executable files to the application category

- About the license

- API Reference Guide

- Basic concepts

- Monitoring, reporting, and audit

- Scenario: Monitoring and reporting

- About types of monitoring and reporting

- Triggering of rules in Smart Training mode

- Dashboard and widgets

- Reports

- Events and event selections

- About events in Open Single Management Platform

- Events of Open Single Management Platform components

- Using event selections

- Creating an event selection

- Editing an event selection

- Viewing a list of an event selection

- Exporting an event selection

- Importing an event selection

- Viewing details of an event

- Exporting events to a file

- Viewing an object history from an event

- Deleting events

- Deleting event selections

- Setting the storage term for an event

- Blocking frequent events

- Event processing and storage on the Administration Server

- Notifications and device statuses

- Kaspersky announcements

- Exporting events to SIEM systems

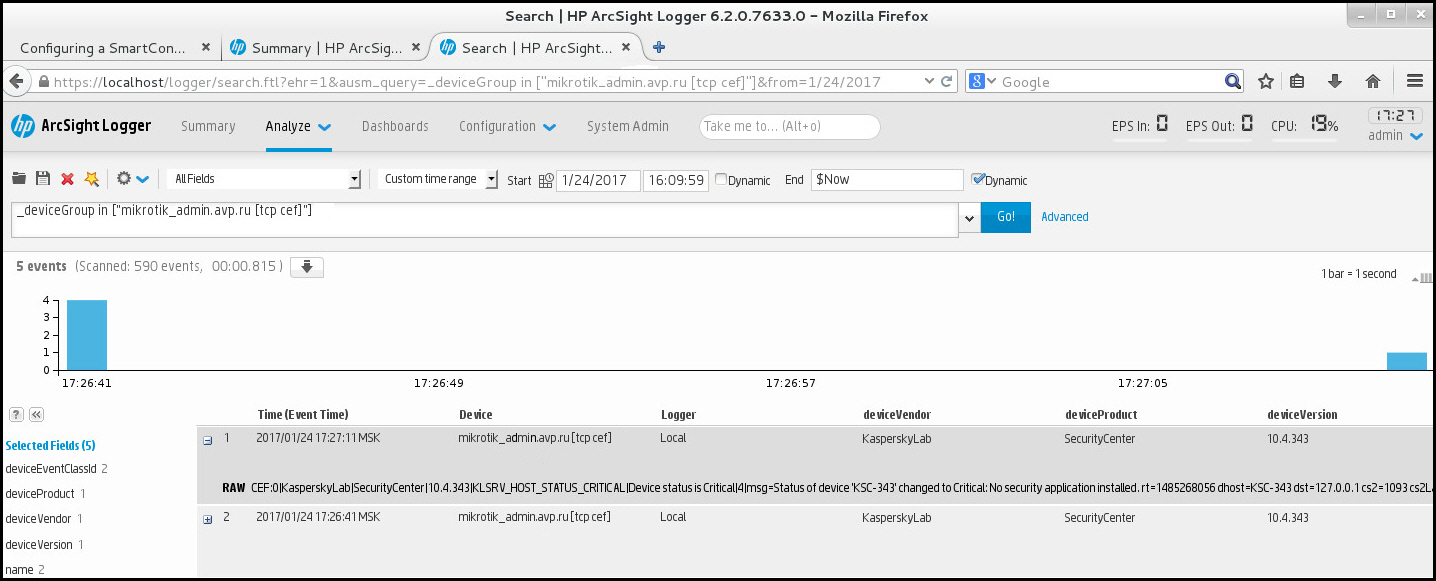

- Scenario: Configuring event export to SIEM systems

- Before you begin

- About event export

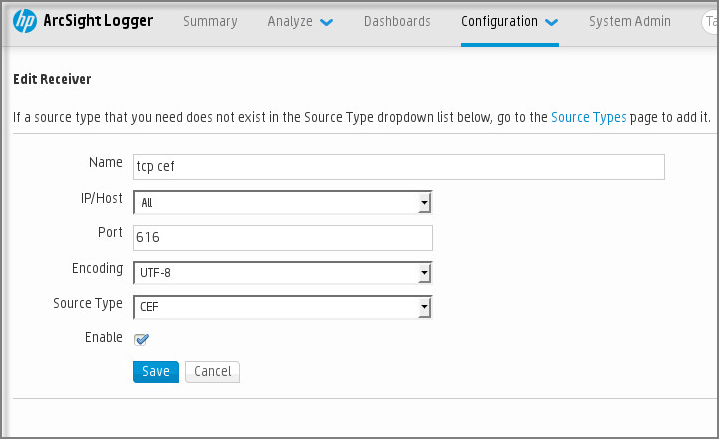

- About configuring event export in a SIEM system

- Marking of events for export to SIEM systems in Syslog format

- About exporting events using Syslog format

- Configuring Open Single Management Platform for export of events to a SIEM system

- Exporting events directly from the database

- Viewing export results

- Managing object revisions

- Rolling back an object to a previous revision

- Deletion of objects

- Downloading and deleting files from Quarantine and Backup

- Operation diagnostics of the Kaspersky Next XDR Expert components

- Multitenancy

- Contact Technical Support

- Known issues

- Appendices

- Commands for components manual starting and installing

- Integrity check of KUMA files

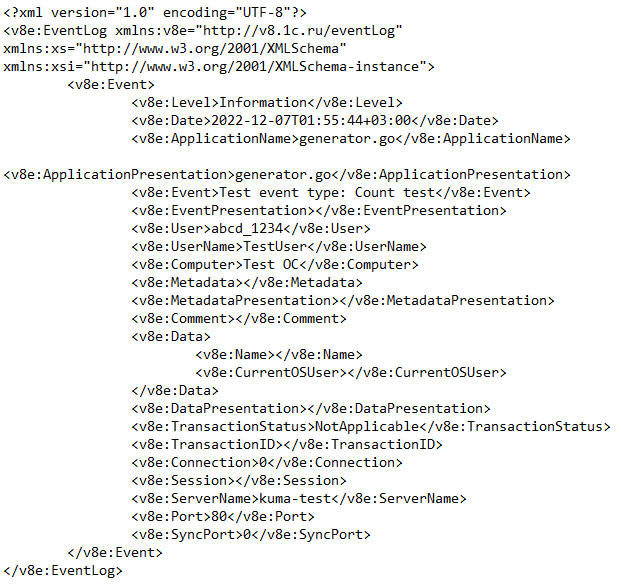

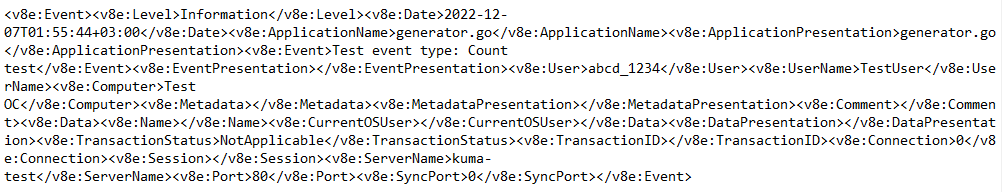

- Normalized event data model

- Configuring the data model of a normalized event from KATA EDR

- Asset data model

- User account data model

- KUMA audit events

- Event fields with general information

- User successfully signed in or failed to sign in

- User successfully logged out

- The user has successfully edited the set of fields settings to define sources

- Service was successfully created

- Service was successfully deleted

- Service was successfully started

- Service was successfully paired

- Service was successfully reloaded

- Service was successfully restarted

- Storage partition was deleted automatically due to expiration

- Storage partition was deleted by user

- Active list was successfully cleared or operation failed

- Active list item was successfully changed, or operation was unsuccessful

- Active list item was successfully deleted or operation was unsuccessful

- Active list was successfully imported or operation failed

- Active list was exported successfully

- Resource was successfully added

- Resource was successfully deleted

- Resource was successfully updated

- Asset was successfully created

- Asset was successfully deleted

- Asset category was successfully added

- Asset category was deleted successfully

- Settings were updated successfully

- The dictionary was successfully updated on the service or operation was unsuccessful

- Response in Active Directory

- Response via KICS for Networks

- Kaspersky Automated Security Awareness Platform response

- KEDR response

- Correlation rules

- Time format

- Mapping fields of predefined normalizers

- Glossary

- Administrator host

- Agent

- Alert

- Asset

- Bootstrap

- Collector

- Configuration file

- Context

- Correlation rule

- Correlator

- Custom actions

- Distribution package

- Event

- Incident

- Investigation graph

- Kaspersky Deployment Toolkit

- Kubernetes cluster

- KUMA inventory file

- KUMA services

- Multitenancy

- Node

- Normalized event

- Observables

- Playbook

- Playbook algorithm

- Registry

- Response actions

- Segmentation rules

- Storage

- Target hosts

- Tenant

- Threat development chain

- Transport archive

- Information about third-party code

- Trademark notices

Kaspersky Next XDR Expert Help

New features

New features

Key features

Key features

- Managing alerts and security incidents

- Threat hunting tools

- Investigation graph

- Predefined and custom playbooks

- Manual threat response actions

- Dashboard and widgets

Compatibility and hardware and software requirements

Compatibility and hardware and software requirements

- Hardware and software requirements

- Compatible applications and solutions

- Integration with other solutions and third-party systems

Getting started

Getting started

- Walk-through scenario of deployment, activation and initial configuration of Kaspersky Next XDR Expert

- Deployment of Kaspersky Next XDR Expert

- Migration to Kaspersky Next XDR Expert

- Using the threat monitoring, detection and hunting capabilities

- Example of incident investigation with Kaspersky Next XDR Expert

Working with Open Single Management Platform

Working with Open Single Management Platform

- Installing Kaspersky security applications on devices on a corporate network

- Remotely run scan and update tasks

- Managing the security policies of managed applications

What's new

Kaspersky Next XDR Expert 1.1

Kaspersky Next XDR Expert has several new features and improvements:

- An updated version of Bootstrap is used in the application. Before you install the new version of Kaspersky Next XDR Expert, update Bootstrap by running the following command:

./kdt apply -k <path_to_XDR_updates_archive> -i <path_to_configuration_file> --force-bootstrap - New design of the user interface.

- Reduced hardware and software requirements.

- Increased application stability.

- A new deployment wizard for the simplified configuration of the installation parameters.

- Addition of predefined playbooks.

- Kaspersky Next XDR Expert now supports the following EPP-applications:

- Kaspersky Endpoint Security 12.0 for Mac

- Kaspersky Industrial CyberSecurity for Nodes 3.2

- Kaspersky Endpoint Agent 3.16

- New Dashboard widgets for monitoring responses performed through playbooks.

- Migration from Kaspersky Security Center to Kaspersky Next XDR Expert, including migration of users and tenants, and the binding of tenants to Administration Servers of Kaspersky Security Center.

- Kaspersky Next XDR Expert is now compatible with Kaspersky Anti Targeted Attack Platform 6.0.

- New features and improvements introduced in the August 2024 update of Kaspersky Unified Monitoring and Analysis Platform.

About Kaspersky Next XDR Expert

Kaspersky Next XDR Expert (XDR) is a robust cybersecurity solution that defends your corporate IT infrastructure against sophisticated cyberthreats, including those that cannot be detected by EPP applications installed on corporate assets. It provides full visibility, correlation, and automation; and leverages a diverse range of response tools and data sources, including endpoint assets, and network and cloud data. To protect your IT infrastructure effectively, Kaspersky Next XDR Expert analyzes the data from these sources to identify threats, create alerts for potential incidents, and provide the tools to respond to them. Kaspersky XDR is backed by advanced analytics capabilities and a strong track record of security expertise.

This solution provides a unified detection and response process through integrated components and holistic scenarios in a single interface to improve the efficiency of security professionals.

The detection tools include:

- Threat hunting tools to proactively search for threats and vulnerabilities by analyzing events.

- Advanced threat detection and cross-correlation: real-time correlation of events from different sources, more than 350 correlation rules out-of-the-box for different scenarios with MITRE ATT&CK matrix mapping, ability to create new rules and customize existing ones, and retrospective scans for detecting zero-day vulnerabilities.

- An investigation graph to visualize and facilitate an incident investigation and identify the root causes of the alert.

- Use of Kaspersky Threat Intelligence Portal to get the latest detailed threat intelligence, for example, about web addresses, domains, IP addresses, file hashes, statistical and behavioral data, and WHOIS and DNS data.

The response tools include:

- Manual response actions: asset isolation, run commands, create prevention rules, launch tasks on an asset, Kaspersky Threat Intelligence Portal reputation enrichment, and training assignments for users.

- Playbooks, both predefined and user-created, to automate typical response operations.

- Third-party application response actions and cross-application response scenarios.

Kaspersky Next XDR Expert also takes advantage of the Open Single Management Platform component for asset management and the centralized run of security administration and maintenance tasks:

- Deploying Kaspersky applications on the assets in the corporate network.

- Remotely launching scan and update tasks.

- Obtaining detailed information about asset protection.

- Configuring all the security components by using Kaspersky applications.

Kaspersky Next XDR Expert supports the hierarchy of tenants.

Kaspersky Next XDR Expert is integrated with Active Directory, includes APIs, and supports a wide range of integrations both with Kaspersky applications and third-party solutions for data obtaining and responding. For information about the applications and solutions that XDR supports, see the Compatible Kaspersky applications and Integration with other solutions sections.

Updates functionality (including providing anti-virus signature updates and codebase updates), as well as KSN functionality may not be available in the software in the U.S.

Hardware and software requirements

This article describes hardware requirements of single node deployment scheme and distributed deployment scheme, software requirements of Open Single Management Platform, hardware and software requirements of Kaspersky Deployment Toolkit and OSMP components.

Single node deployment: hardware requirements

Single node scheme only supports up to 10,000 devices in the network.

Additional nodes are required for KATA/KEDR.

In case of single node deployment, it is strongly recommended that you first install the DBMS manually on the host that will act as a primary node. After that, you can deploy Kaspersky Next XDR Expert on the same host.

Minimum hardware requirements

Solution |

250 devices |

1000 devices |

3000 devices |

5000 devices |

10,000 devices |

|---|---|---|---|---|---|

A solution that includes the following applications:

|

Minimum solution configuration*: 1 target host |

Minimum solution configuration*: 1 target host |

Minimum solution configuration*: 1 target host |

Minimum solution configuration*: 1 target host |

Minimum solution configuration*: 1 target host |

|

|

|

|

|

|

A solution that includes the following applications:

|

Minimum solution configuration*:

|

Minimum solution configuration*:

|

Minimum solution configuration*:

|

Minimum solution configuration*:

|

Minimum solution configuration*:

|

1 target host:

1 CN host (KATA/KEDR):

1 Sandbox host (KATA/KEDR):

|

1 target host:

1 CN host (KATA/KEDR):

1 Sandbox host (KATA/KEDR):

|

1 target host:

1 CN host (KATA/KEDR):

1 Sandbox host (KATA/KEDR):

|

1 target host:

1 CN host (KATA/KEDR):

1 Sandbox host (KATA/KEDR):

|

1 target host:

1 CN host (KATA/KEDR):

1 Sandbox host (KATA/KEDR):

|

* The requirements do not take into account hosts for KUMA services. Refer to the following topic for details: Requirements for hosts with KUMA services.

To deploy the solution correctly, ensure that CPU of the target host supports the BMI, AVX, and SSE 4.2 instruction set.

Distributed deployment: hardware requirements

Multi-node cluster scheme is recommended for networks that exceed 10,000 devices.

Minimum hardware requirements

Solution |

20,000 devices |

30,000 devices |

|---|---|---|

A solution that includes the following applications:

|

Minimum solution configuration*:

|

Minimum solution configuration*:

|

Aggregate performance of target hosts:

|

Aggregate performance of target hosts:

|

|

Database host**:

|

Database host**:

|

|

A solution that includes the following applications:

|

Minimum solution configuration*:

|

Minimum solution configuration*:

|

Aggregate performance of target and KATA/KEDR hosts:

|

Aggregate performance of target and KATA/KEDR hosts:

|

|

Database host**:

|

Database host**:

|

* The requirements do not take into account hosts for KUMA services. Refer to the following topic for details: Requirements for hosts with KUMA services.

** The database can be hosted either inside the cluster or on a separate host outside the cluster.

To deploy the solution correctly, ensure that CPUs of target hosts support the BMI/AVX instruction set.

Open Single Management Platform: Software requirements

Software requirements and supported systems and platforms

Operating system |

64-bit versions of the following operating systems are supported: Astra Linux Special Edition RUSB.10015-01 (2023-0426SE17 update 1.7.4) Ubuntu Server 22.04 LTS Debian GNU/Linux 11.х (Bullseye) |

Virtualization platforms |

VMWare vSphere 7 VMWare vSphere 8 Microsoft Hyper-V Server 2016 Microsoft Hyper-V Server 2019 Microsoft Hyper-V Server 2022 Kernel-based Virtual Machine Proxmox Virtual Environment 7.2 Proxmox Virtual Environment 7.3 Nutanix AHV 20220304.242 and later |

Database management system (DBMS) |

PostgreSQL 13.х 64-bit PostgreSQL 14.х 64-bit PostgreSQL 15.х 64-bit Postgres Pro 13.х 64-bit (all editions) Postgres Pro 14.х 64-bit (all editions) Postgres Pro 15.х 64-bit (all editions) |

Kaspersky Deployment Toolkit

All Open Single Management Platform components are installed by using Kaspersky Deployment Toolkit.

Kaspersky Deployment Toolkit has the following hardware and software requirements:

Specification |

System requirements |

Hardware |

CPU: 4 cores, operating frequency of 2.5 GHz RAM: 8 GB Available disk space: 40 GB |

Operating system |

64-bit versions of the following operating systems are supported:

|

Open Single Management Platform components

To view the hardware and software requirements for an Open Single Management Platform component, click its name:

- OSMP Console

- Kaspersky Unified Monitoring and Analysis Platform (hereinafter KUMA)

- Secondary Kaspersky Security Center Administration Servers

- Kaspersky Security Center Network Agent

- Kaspersky Endpoint Security for Windows

- Kaspersky Anti Targeted Attack Platform (hereinafter KATA)

- Kaspersky Industrial CyberSecurity for Networks

- Kaspersky Industrial CyberSecurity for Nodes

- Kaspersky CyberTrace

- Kaspersky Threat Intelligence Portal

- Kaspersky Automated Security Awareness Platform (hereinafter KASAP)

Requirements for hosts with KUMA services

The KUMA services (collectors, correlators, and storages) are installed on the hosts that are outside of the Kubernetes cluster. Hardware and software requirements for these hosts are described in this article.

Recommended hardware and software requirements

This section lists the hardware and software requirements for processing a data stream of up to 40,000 events per second (EPS). The KUMA load value depends on the type of events being parsed and the efficiency of the normalizer.

For event processing efficiency, the CPU core count is more important than the clock rate. For example, 8 CPU cores with a medium clock rate can process events more efficiently than 4 CPU cores with a high clock rate. The table below lists the hardware and software requirements of KUMA components.

The amount of RAM utilized by the collector depends on configured enrichment methods (DNS, accounts, assets, enrichment with data from Kaspersky CyberTrace) and whether aggregation is used. RAM consumption is influenced by the data aggregation window setting, the number of fields used for aggregation of data, volume of data in fields being aggregated.

For example, with an event stream of 1000 EPS and event enrichment disabled (event enrichment is disabled, event aggregation is disabled, 5000 accounts, 5000 assets per tenant), one collector requires the following resources:

- 1 CPU core or 1 virtual CPU

- 512 MB of RAM

- 1 GB of disk space (not counting event cache)

For example, to support 5 collectors that do not perform event enrichment, you must allocate the following resources: 5 CPU cores, 2.5 GB of RAM, and 5 GB of free disk space.

Recommended hardware and software requirements for installation of the KUMA services

|

Collector |

Correlator |

Storage |

|---|---|---|---|

CPU |

Intel or AMD with SSE 4.2 support: at least 4 cores/8 threads or 8 virtual CPUs. |

Intel or AMD with SSE 4.2 support: at least 4 cores/8 threads or 8 virtual CPUs. |

Intel or AMD with SSE 4.2 support: at least 12 cores/24 threads or 24 virtual CPUs. |

RAM |

16 GB |

16 GB |

48 GB |

Free disk space |

/opt directory size: at least 500 GB. |

/opt directory size: at least 500 GB. |

/opt directory size: at least 500 GB. |

Operating systems |

|

||

Network bandwidth |

100 Mbps |

100 Mbps |

The transfer rate between ClickHouse nodes must be at least 10 Gbps if the data stream exceeds 20,000 EPS. |

Installation of KUMA is supported in the following virtual environments:

- VMware 6.5 or later

- Hyper-V for Windows Server 2012 R2 or later

- QEMU-KVM 4.2 or later

- Software package of virtualization tools "Brest" RDTSP.10001-02

Kaspersky recommendations for storage servers

For storage servers Kaspersky specialists recommend the following:

- Put ClickHouse on solid state drives (SSD). SSDs help improve data access speed. Hard drives can be used to store data using the HDFS technology.

- To connect a data storage system to storage servers, use high-speed protocols, such as Fibre Channel or iSCSI 10G. We do not recommend using application-level protocols such as NFS and SMB to connect data storage systems.

- Use the ext4 file system on ClickHouse cluster servers.

- If you are using RAID arrays, use RAID 0 for high performance, or RAID 10 for high performance and fault tolerance.

- To ensure fault tolerance and performance of the data storage subsystem, make sure that ClickHouse nodes are deployed strictly on different disk arrays.

- If you are using a virtualized infrastructure to host system components, deploy ClickHouse cluster nodes on different hypervisors. In this case, it is necessary to prevent two virtual machines with ClickHouse from working on the same hypervisor.

- For high-load KUMA installations, install ClickHouse on physical servers.

Requirements for devices for installing agents

To have data sent to the KUMA collector, you must install agents on the network infrastructure devices. Hardware and software requirements are listed in the table below.

Recommended hardware and software requirements for installation of agents

|

Windows devices |

Linux devices |

|---|---|---|

CPU |

Single-core, 1.4 GHz or higher |

Single-core, 1.4 GHz or higher |

RAM |

512 MB |

512 MB |

Free disk space |

1 GB |

1 GB |

Operating systems |

|

|

OSMP Console requirements

OSMP Console Server

For hardware and software requirements, refer to the requirements for a worker node.

Client devices

For a client device, use of OSMP Console requires only a browser.

The minimum screen resolution is 1366x768 pixels.

The hardware and software requirements for the device are identical to the requirements of the browser that is used with OSMP Console.

Browsers:

- Google Chrome 100.0.4896.88 or later (official build)

- Microsoft Edge 100 or later

- Safari 15 on macOS

- "Yandex" Browser 23.5.0.2271 or later

- Mozilla Firefox Extended Support Release 102.0 or later

Network Agent requirements

Minimum hardware requirements:

- CPU with operating frequency of 1 GHz or higher. For a 64-bit operating system, the minimum CPU frequency is 1.4 GHz.

- RAM: 512 MB.

- Available disk space: 1 GB.

Software requirement for Linux-based devices: the Perl language interpreter version 5.10 or higher must be installed.

The following operating systems are supported:

- Microsoft Windows Embedded POSReady 2009 with latest Service Pack 32-bit

- Microsoft Windows Embedded 7 Standard with Service Pack 1 32-bit/64-bit

- Microsoft Windows Embedded 8.1 Industry Pro 32-bit/64-bit

- Microsoft Windows 10 Enterprise 2015 LTSB 32-bit/64-bit

- Microsoft Windows 10 Enterprise 2016 LTSB 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise 2015 LTSB 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise 2016 LTSB 32-bit/64-bit

- Microsoft Windows 10 Enterprise 2019 LTSC 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise version 1703 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise version 1709 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise version 1803 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise version 1809 32-bit/64-bit

- Microsoft Windows 10 20H2 IoT Enterprise 32-bit/64-bit

- Microsoft Windows 10 21H2 IoT Enterprise 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise version 1909 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise LTSC 2021 32-bit/64-bit

- Microsoft Windows 10 IoT Enterprise version 1607 32-bit/64-bit

- Microsoft Windows 10 Home RS3 (Fall Creators Update, v1709) 32-bit/64-bit

- Microsoft Windows 10 Pro RS3 (Fall Creators Update, v1709) 32-bit/64-bit

- Microsoft Windows 10 Pro for Workstations RS3 (Fall Creators Update, v1709) 32-bit/64-bit

- Microsoft Windows 10 Enterprise RS3 (Fall Creators Update, v1709) 32-bit/64-bit

- Microsoft Windows 10 Education RS3 (Fall Creators Update, v1709) 32-bit/64-bit

- Microsoft Windows 10 Home RS4 (April 2018 Update, 17134) 32-bit/64-bit

- Microsoft Windows 10 Pro RS4 (April 2018 Update, 17134) 32-bit/64-bit

- Microsoft Windows 10 Pro for Workstations RS4 (April 2018 Update, 17134) 32-bit/64-bit

- Microsoft Windows 10 Enterprise RS4 (April 2018 Update, 17134) 32-bit/64-bit

- Microsoft Windows 10 Education RS4 (April 2018 Update, 17134) 32-bit/64-bit

- Microsoft Windows 10 Home RS5 (October 2018) 32-bit/64-bit

- Microsoft Windows 10 Pro RS5 (October 2018) 32-bit/64-bit

- Microsoft Windows 10 Pro for Workstations RS5 (October 2018) 32-bit/64-bit

- Microsoft Windows 10 Enterprise RS5 (October 2018) 32-bit/64-bit

- Microsoft Windows 10 Education RS5 (October 2018) 32-bit/64-bit

- Microsoft Windows 10 Home 19H1 32-bit/64-bit

- Microsoft Windows 10 Pro 19H1 32-bit/64-bit

- Microsoft Windows 10 Pro for Workstations 19H1 32-bit/64-bit

- Microsoft Windows 10 Enterprise 19H1 32-bit/64-bit

- Microsoft Windows 10 Education 19H1 32-bit/64-bit

- Microsoft Windows 10 Home 19H2 32-bit/64-bit

- Microsoft Windows 10 Pro 19H2 32-bit/64-bit

- Microsoft Windows 10 Pro for Workstations 19H2 32-bit/64-bit

- Microsoft Windows 10 Enterprise 19H2 32-bit/64-bit

- Microsoft Windows 10 Education 19H2 32-bit/64-bit

- Microsoft Windows 10 Home 20H1 (May 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Pro 20H1 (May 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Enterprise 20H1 (May 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Education 20H1 (May 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Home 20H2 (October 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Pro 20H2 (October 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Enterprise 20H2 (October 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Education 20H2 (October 2020 Update) 32-bit/64-bit

- Microsoft Windows 10 Home 21H1 (May 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Pro 21H1 (May 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Enterprise 21H1 (May 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Education 21H1 (May 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Home 21H2 (October 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Pro 21H2 (October 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Enterprise 21H2 (October 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Education 21H2 (October 2021 Update) 32-bit/64-bit

- Microsoft Windows 10 Home 22H2 (October 2023 Update) 32-bit/64-bit

- Microsoft Windows 10 Pro 22H2 (October 2023 Update) 32-bit/64-bit

- Microsoft Windows 10 Enterprise 22H2 (October 2023 Update) 32-bit/64-bit

- Microsoft Windows 10 Education 22H2 (October 2023 Update) 32-bit/64-bit

- Microsoft Windows 11 Home 64-bit

- Microsoft Windows 11 Pro 64-bit

- Microsoft Windows 11 Enterprise 64-bit

- Microsoft Windows 11 Education 64-bit

- Microsoft Windows 11 22H2

- Microsoft Windows 8.1 Pro 32-bit/64-bit

- Microsoft Windows 8.1 Enterprise 32-bit/64-bit

- Microsoft Windows 8 Pro 32-bit/64-bit

- Microsoft Windows 8 Enterprise 32-bit/64-bit

- Microsoft Windows 7 Professional with Service Pack 1 and later 32-bit/64-bit

- Microsoft Windows 7 Enterprise/Ultimate with Service Pack 1 and later 32-bit/64-bit

- Microsoft Windows 7 Home Basic/Premium with Service Pack 1 and later 32-bit/64-bit

- Microsoft Windows XP Professional with Service Pack 2 32-bit/64-bit (supported by Network Agent version 10.5.1781 only)

- Microsoft Windows XP Professional with Service Pack 3 and later 32-bit (supported by Network Agent version 14.0.0.20023)

- Microsoft Windows XP Professional for Embedded Systems with Service Pack 3 32-bit (supported by Network Agent version 14.0.0.20023)

- Windows MultiPoint Server 2011 Standard/Premium 64-bit

- Windows Server 2003 SP1 32-bit/64-bit (supported only by Network Agent version 10.5.1781, which you can request through Technical Support)

- Windows Server 2008 Foundation with Service Pack 2 32-bit/64-bit

- Windows Server 2008 with Service Pack 2 (all editions) 32-bit/64-bit

- Windows Server 2008 R2 Datacenter with Service Pack 1 and later 64-bit

- Windows Server 2008 R2 Enterprise with Service Pack 1 and later 64-bit

- Windows Server 2008 R2 Foundation with Service Pack 1 and later 64-bit

- Windows Server 2008 R2 Core Mode with Service Pack 1 and later 64-bit

- Windows Server 2008 R2 Standard with Service Pack 1 and later 64-bit

- Windows Server 2008 R2 with Service Pack 1 (all editions) 64-bit

- Windows Server 2012 Server Core 64-bit

- Windows Server 2012 Datacenter 64-bit

- Windows Server 2012 Essentials 64-bit

- Windows Server 2012 Foundation 64-bit

- Windows Server 2012 Standard 64-bit

- Windows Server 2012 R2 Server Core 64-bit

- Windows Server 2012 R2 Datacenter 64-bit

- Windows Server 2012 R2 Essentials 64-bit

- Windows Server 2012 R2 Foundation 64-bit

- Windows Server 2012 R2 Standard 64-bit

- Windows Server 2016 Datacenter (LTSB) 64-bit

- Windows Server 2016 Standard (LTSB) 64-bit

- Windows Server 2016 Server Core (Installation Option) (LTSB) 64-bit

- Windows Server 2019 Standard 64-bit

- Windows Server 2019 Datacenter 64-bit

- Windows Server 2019 Core 64-bit

- Windows Server 2022 Standard 64-bit

- Windows Server 2022 Datacenter 64-bit

- Windows Server 2022 Core 64-bit

- Debian GNU/Linux 10.х (Buster) 32-bit/64-bit

- Debian GNU/Linux 11.х (Bullseye) 32-bit/64-bit

- Debian GNU/Linux 12 (Bookworm) 32-bit/64-bit

- Ubuntu Server 18.04 LTS (Bionic Beaver) 32-bit/64-bit

- Ubuntu Server 20.04 LTS (Focal Fossa) 32-bit/64-bit

- Ubuntu Server 22.04 LTS (Jammy Jellyfish) 64-bit

- CentOS 7.x 64-bit

- CentOS Stream 9 64-bit

- Red Hat Enterprise Linux Server 6.x 32-bit/64-bit

- Red Hat Enterprise Linux Server 7.x 64-bit

- Red Hat Enterprise Linux Server 8.x 64-bit

- Red Hat Enterprise Linux Server 9.x 64-bit

- SUSE Linux Enterprise Server 12 (all Service Packs) 64-bit

- SUSE Linux Enterprise Server 15 (all Service Packs) 64-bit

- SUSE Linux Enterprise Desktop 15 with Service Pack 3 ARM 64-bit

- openSUSE 15 64-bit

- EulerOS 2.0 SP8 ARM 64-bit

- Astra Linux Special Edition RUSB.10015-01 (operational update 1.6) 64-bit

- Astra Linux Special Edition RUSB.10015-01 (operational update 1.7) 64-bit

- Astra Linux Special Edition RUSB.10015-01 (operational update 1.8) 64-bit

- Astra Linux Common Edition (operational update 2.12) 64-bit

- Astra Linux Special Edition RUSB.10152-02 (operational update 4.7) ARM 64-bit

- ALT SP Server 10 64-bit

- ALT SP Workstation 10 64-bit

- ALT Server 10 64-bit

- ALT Server 9.2 64-bit

- ALT Workstation 9.2 32-bit/64-bit

- ALT Workstation 10 32-bit/64-bit

- ALT 8 SP Server (LKNV.11100-01) 64-bit

- ALT 8 SP Server (LKNV.11100-02) 64-bit

- ALT 8 SP Server (LKNV.11100-03) 64-bit

- ALT 8 SP Workstation (LKNV.11100-01) 32-bit/64-bit

- ALT 8 SP Workstation (LKNV.11100-02) 32-bit/64-bit

- ALT 8 SP Workstation (LKNV.11100-03) 32-bit/64-bit

- Mageia 4 32-bit

- Oracle Linux 7 64-bit

- Oracle Linux 8 64-bit

- Oracle Linux 9 64-bit

- Linux Mint 20.x 64-bit

- AlterOS 7.5 and later 64-bit

- GosLinux IC6 64-bit

- RED OS 7.3 Server 64-bit

- RED OS 7.3 Certified Edition 64-bit

- ROSA COBALT 7.9 64-bit

- ROSA CHROME 12 64-bit

- macOS Big Sur (11.x)

- macOS Monterey (12.x)

- macOS Ventura (13.x)

- macOS Sonoma (14.x)

For Network Agent, the Apple Silicon (M1) architecture is also supported, as well as Intel.

The following virtualization platforms are supported:

- VMware vSphere 6.7

- VMware vSphere 7.0

- VMware vSphere 8.0

- VMware Workstation 16 Pro

- VMware Workstation 17 Pro

- Microsoft Hyper-V Server 2012 64-bit

- Microsoft Hyper-V Server 2012 R2 64-bit

- Microsoft Hyper-V Server 2016 64-bit

- Microsoft Hyper-V Server 2019 64-bit

- Microsoft Hyper-V Server 2022 64-bit

- Citrix XenServer 7.1 LTSR

- Citrix XenServer 8.x

- Parallels Desktop 17

- Oracle VM VirtualBox 6.x

- Oracle VM VirtualBox 7.x

- Kernel-based Virtual Machine (all Linux operating systems supported by Network Agent)

On the devices running Windows 10 version RS4 or RS5, Kaspersky Security Center might be unable to detect some vulnerabilities in folders where case sensitivity is enabled.

Before installing Network Agent on the devices running Windows 7, Windows Server 2008, Windows Server 2008 R2 or Windows MultiPoint Server 2011, make sure that you have installed the security update KB3063858 for OS Windows (Security Update for Windows 7 (KB3063858), Security Update for Windows 7 for x64-based Systems (KB3063858), Security Update for Windows Server 2008 (KB3063858), Security Update for Windows Server 2008 x64 Edition (KB3063858), Security Update for Windows Server 2008 R2 x64 Edition (KB3063858).

In Microsoft Windows XP, Network Agent might not perform some operations correctly.

You can install or update Network Agent for Windows XP in Microsoft Windows XP only. The supported editions of Microsoft Windows XP and their corresponding versions of the Network Agent are listed in the list of supported operating systems. You can download the required version of the Network Agent for Microsoft Windows XP from this page.

We recommend that you install the same version of the Network Agent for Linux as Open Single Management Platform.

Open Single Management Platform fully supports Network Agent of the same or newer versions.

Network Agent for macOS is provided together with Kaspersky security application for this operating system.

Page top

Compatible applications and solutions

Kaspersky Next XDR Expert can be integrated with the following versions of applications and solutions:

- Kaspersky Security Center 15 Linux (as secondary Administration Servers)

- Kaspersky Security Center 14.2 Windows (as secondary Administration Servers)

- Kaspersky Anti Targeted Attack Platform 5.1

- Kaspersky Anti Targeted Attack Platform 6.0

- Kaspersky Endpoint Security for Windows 12.3 or later (supports file servers)

- Kaspersky Endpoint Security 12.4 for Windows

- Kaspersky Endpoint Security 12.0 for Mac

- Kaspersky CyberTrace 4.2 (integration can only be configured in the KUMA Console)

- Kaspersky Industrial CyberSecurity for Nodes 3.2 or later

- Kaspersky Endpoint Agent 3.16

- Kaspersky Industrial CyberSecurity for Networks 4.0 (integration can only be configured in the KUMA Console)

- Kaspersky Secure Mail Gateway 2.0 and later (integration can only be configured in the KUMA Console)

- Kaspersky Security for Linux Mail Server 10 and later (integration can only be configured in the KUMA Console)

- Kaspersky Web Traffic Security 6.0 and later (integration can only be configured in the KUMA Console)

- UserGate 7

- Kaspersky Automated Security Awareness Platform

- Kaspersky Threat Intelligence Portal

Refer to the Application Support Lifecycle webpage for the versions of the applications.

Known issues

Open Single Management Platform supports management of Kaspersky Endpoint Security for Windows with the following limitations:

- The Adaptive Anomaly Control component is not supported. Open Single Management Platform does not support Adaptive Anomaly Control rules.

- Kaspersky Sandbox components are not supported.

- The Seamless updates functionality is not available.

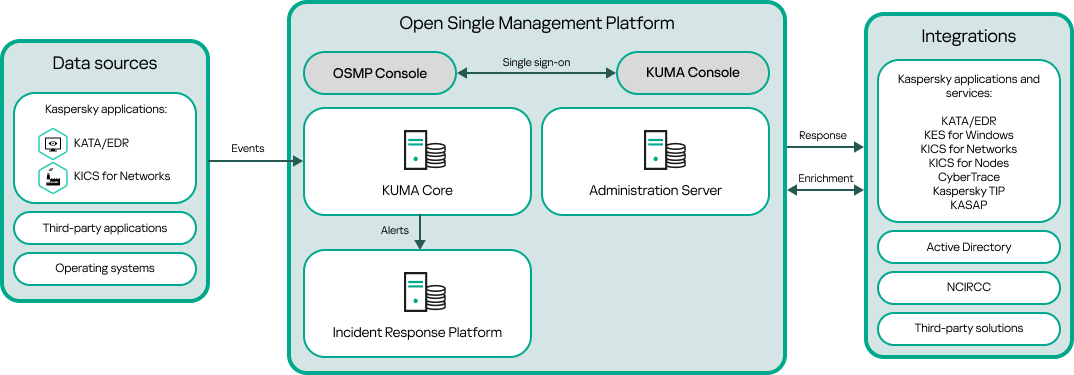

Architecture of Kaspersky Next XDR Expert

This section provides a description of the components of Kaspersky Next XDR Expert and their interaction.

Kaspersky Next XDR Expert architecture

Kaspersky Next XDR Expert comprises the following main components:

- Open Single Management Platform (OSMP). The technology basis on which Kaspersky Next XDR Expert is built. OSMP integrates all of the solution components and provides interaction between the components. OSMP is scalable and supports integration with both Kaspersky applications and third-party solutions.

- OSMP Console. Provides a web interface for OSMP.

- KUMA Console. Provides a web interface for Kaspersky Unified Monitoring and Analysis Platform (KUMA).

- KUMA Core. The central component of KUMA. KUMA receives, processes, and stores information security events and then analyzes the events by using correlation rules. As a result of the analysis, if the conditions of a correlation rule are met, KUMA creates an alert and sends it to Incident Response Platform.

- Incident Response Platform. A Kaspersky Next XDR Expert component that allows you to create incidents automatically or manually, manage alert and incident life cycle, assign alerts and incidents to SOC analysts, and respond to the incidents automatically or manually, including responses through playbooks.

- Administration Server (also referred to as Server). The key component of endpoint protection of a client organization. Administration Server provides centralized deployment and management of endpoint protection through EPP-applications, and allows you to monitor the endpoint protection status.

- Data sources. Information security hardware and software that generates the events. After you integrate Kaspersky Next XDR Expert with the required data sources, KUMA receives the events to store and analyze them.

- Integrations. Kaspersky applications and third-party solutions integrated with OSMP. Through integrated solutions, an SOC analyst can enrich the data required for incident investigation, and then respond to incidents.

OSMP Console interface

Kaspersky Next XDR Expert is managed through the OSMP Console and KUMA Console interfaces.

The OSMP Console window contains the following items:

- Main menu in the left part of the window

- Work area in the right part of the window

Main menu

The main menu contains the following sections:

- Administration Server. Displays the name of the Administration Server that you are currently connected to. Click the settings icon (

) to open the Administration Server properties.

) to open the Administration Server properties. - Monitoring & Reporting. Provides an overview of your infrastructure, protection statuses, and statistics, including threat hunting, alerts and incidents, and playbooks.

- Assets (Devices). Contains tools for assets, as well as tasks and Kaspersky application policies.

- Users & Roles. Allows you to manage users and roles, configure user rights by assigning roles to the users, and associate policy profiles with roles.

- Operations. Contains a variety of operations, including application licensing, viewing and managing encrypted drives and encryption events, and third-party application management. This also provides you access to application repositories.

- Discovery & Deployment. Allows you to poll the network to discover client devices, and distribute the devices to administration groups manually or automatically. This section also contains the quick start wizard and Protection deployment wizard.

- Marketplace. Contains information about the entire range of Kaspersky business solutions and allows you to select the ones you need, and then proceed to purchase those solutions at the Kaspersky website.

- Settings. Contains settings to integrate Kaspersky Next XDR Expert with other Kaspersky applications and allows you to go to the KUMA Console. It also contains your personal settings related to the interface appearance, such as interface language or theme.

- Your account menu. Contains a link to Kaspersky Next XDR Expert Help. It also allows you to sign out of Kaspersky Next XDR Expert, and view the OSMP Console version and the list of installed management web plug-ins.

Work area

The work area displays the information you choose to view in the sections of the OSMP Console interface window. It also contains control elements that you can use to configure how the information is displayed.

Pinning and unpinning sections of the main menu

You can pin sections of OSMP Console to add them to favorites and access them quickly from the Pinned section in the main menu.

If there are no pinned elements, the Pinned section is not displayed in the main menu.

You can pin sections that display pages only. For example, if you go to Assets (Devices) → Managed devices, a page with the table of devices opens, which means you can pin the Managed devices section. If a window or no element is displayed after you select the section in the main menu, then you cannot pin such a section.

To pin a section:

- In the main menu, hover the mouse cursor over the section you want to pin.

The pin (

) icon is displayed.

) icon is displayed. - Click the pin (

) icon.

) icon.

The section is pinned and displayed in the Pinned section.

The maximum number of elements that you can pin is five.

You can also remove elements from favorites by unpinning them.

To unpin a section:

- In the main menu, go to the Pinned section.

- Hover the mouse cursor over the section you want to unpin, and then click the unpin (

) icon.

) icon.

The section is removed from favorites.

Page top

Changing the language of the OSMP Console interface

You can select the language of the OSMP Console interface.

To change the interface language:

- In the main menu, go to Settings → Language.

- Select one of the supported localization languages.

Licensing

This section covers the main aspects of Open Single Management Platform licensing.

About the End User License Agreement

The End User License Agreement (License Agreement) is a binding agreement between you and AO Kaspersky Lab stipulating the terms on which you may use the application.

Carefully read the License Agreement before you start using the application.

You can view the terms of the End User License Agreement by using the following methods:

- During installation of Open Single Management Platform.

- By reading the license.txt document. This document is included in the application distribution kit.

You accept the terms of the End User License Agreement by confirming that you agree with the End User License Agreement when installing the application. If you do not accept the terms of the License Agreement, cancel application installation and do not use the application.

Page top

About the license key

A license key is a sequence of bits that you can apply to activate and then use the application in accordance with the terms of the End User License Agreement. License keys are generated by Kaspersky specialists.

You can add a license key to the application using one of the following methods: by applying a key file or by entering an activation code. The license key is displayed in the application interface as a unique alphanumeric sequence after you add it to the application.

The license key may be blocked by Kaspersky in case the terms of the License Agreement have been violated. If the license key has been blocked, you need to add another one if you want to use the application.

A license key may be active or additional (or reserve).

An active license key is a license key that is currently used by the application. An active license key can be added for a trial or commercial license. The application cannot have more than one active license key.

An additional (or reserve) license key is a license key that entitles the user to use the application, but is not currently in use. The additional license key automatically becomes active when the license associated with the current active license key expires. An additional license key can be added only if an active license key has already been added.

A license key for a trial license can be added as an active license key. A license key for a trial license cannot be added as an additional license key.

Page top

About the activation code

An activation code is a unique sequence of 20 letters and numbers. You have to enter an activation code in order to add a license key for activating Open Single Management Platform. You receive the activation code at the email address that you provided when you bought Open Single Management Platform or requested the trial version of Open Single Management Platform.

To activate the application by using the activation code, you need internet access in order to connect to Kaspersky activation servers.

If you have lost your activation code after installing the application, contact the Kaspersky partner from whom you purchased the license.

Page top

About the key file

A key file is a file with the .key extension provided to you by Kaspersky. Key files are designed to activate the application by adding a license key.

You receive a key file at the email address that you provided when you bought Open Single Management Platform or ordered the trial version of Open Single Management Platform.

You do not need to connect to Kaspersky activation servers in order to activate the application with a key file.

You can restore a key file if it has been accidentally deleted. You may need a key file to register a Kaspersky CompanyAccount, for example.

To restore your key file, perform any of the following actions:

- Contact the license seller.

- Receive a key file through Kaspersky website by using your available activation code.

License limits

When you purchase a Kaspersky Next XDR Expert license, you determine the number of users you want to protect. You can exceed the license limit by no more than 5%. If you exceed the license limit by more than 5%, the extra devices and extra accounts are added to the Restricted assets list.

If the license limit is exceeded, a notification appears at the top of the OSMP Console.

It is not possible to launch response actions or playbooks for restricted assets.

To view the list of restricted assets:

- In the main menu, go to Settings → Tenants.

- In the Tenants section, click the Root tenant.

The Root tenant's properties window opens.

- Select the Licenses tab.

- Click the link with the number of restricted assets.

The Restricted assets window opens.

The list shows a maximum of 2000 restricted assets.

Page top

Activating Kaspersky Next XDR Expert

After you install Kaspersky Next XDR Expert, you must activate the application in the Administration Server properties.

To activate Kaspersky Next XDR Expert:

- In the main menu, click the settings icon (

) next to the name of the root Administration Server.

) next to the name of the root Administration Server.The Administration Server properties window opens.

- On the General tab, select the License keys section.

- Under Current license, click the Select button.

- In the window that opens, select the license key that you want to use to activate Kaspersky Next XDR Expert. If the license key is not listed, click the Add new license key button, and then specify a new license key.

- If necessary, you can also add a . To do this, under Reserve license key, click the Select button, and then select an existing license key or add a new one. Note that you cannot add a reserve license key if there is no active license key.

- Click the Save button.

Viewing information about license keys in use

To view active and reserve license keys:

- In the main menu, go to Settings → Tenants.

- In the Tenants section, click the root tenant.

The root tenant's properties window opens.

- Select the Licenses tab.

The active and reserve license keys are displayed.

The displayed license key is applied to all child tenants of the root tenant. Specifying a separate license key for a child tenant is not available. The properties window for child tenants does not include the Licenses tab.

If the license keys limit is exceeded, a notification is shown, and the information about the license key shows a warning.

You can click the Go to Administration Server button to manage Kaspersky Next XDR Expert license keys.

On the Licenses tab, you can also view the list of licensed objects. To do this, click the ![]() button.

button.

The availability of the licensed object depends on the purchased license type. For more information about license types, see Licensing and features of Kaspersky Next XDR Expert.

Page top

Renewing licenses for Kaspersky applications

You can renew licenses for Kaspersky Next XDR Expert and included Kaspersky applications, such as Kaspersky Unified Monitoring and Analysis Platform, and Kaspersky Endpoint Detection and Response Expert. You can renew licenses that have expired or are going to expire within 30 days.

An email with an archive containing the new license keys will be sent to your email address after you purchase a new Kaspersky Next XDR Expert license.

To renew a license of Kaspersky Next XDR Expert:

- Extract the new license keys from the archive sent to your email address.

- Follow the steps described in Activating Kaspersky Next XDR Expert.

The license is renewed.

If you need to renew the licenses of the included Kaspersky applications, you must add new license keys to the web interfaces of these solutions.

For how to renew a license of Kaspersky Unified Monitoring and Analysis Platform, see the Adding a license key to the program web interface section of the Kaspersky Unified Monitoring and Analysis Platform Help.

For how to renew a license of Kaspersky Endpoint Detection and Response Expert, see the Adding a key section of the Kaspersky Anti Targeted Attack Platform Help.

In OSMP Console, the notifications are displayed when a license is about to expire, according to the following schedule:

- 30 days before the expiration

- 7 days before the expiration

- 3 days before the expiration

- 24 hours before the expiration

- When a license has expired

About data provision

Data processed locally

Kaspersky Next XDR Expert is designed to optimize threat detection, incident investigation, threat response (including automatic), and proactive threat hunting in real time.

Kaspersky Next XDR Expert performs the following main functions:

- Receiving, processing, and storing information security events.

- Analysis and correlation of incoming data.

- Incidents and alerts investigation, manual response.

- Automatic response by using the predefined and custom playbooks.

- Event-based threat hunting in real time.

To perform its main functions, Kaspersky Next XDR Expert can receive, store and process the following information:

- Information about the devices on which all Kaspersky Next XDR Expert components are installed:

- Technical specifications: device name, MAC address, operating system vendor, operating system build number, OS kernel version, required installed packages, account rights, service management tool type, and port status. This data is collected by Kaspersky Deployment Toolkit during installation.

- Technical specifications: IPv4 address. This data is specified by the user in the Kaspersky Deployment Toolkit configuration file.

- Device access data: account names and SSH keys. This data is specified by the user in the Kaspersky Deployment Toolkit configuration file.

- Database access data: IP/DNS name, port, user name, and password. This data is specified by the user in the Kaspersky Deployment Toolkit configuration file.

- KUMA inventory and license keys. This data is specified by the user in the Kaspersky Deployment Toolkit configuration file.

- DNS zone. This data is specified by the user in the Kaspersky Deployment Toolkit configuration file.

- Certificates for secure connection of devices to OSMP components. This data is specified by the user in the Kaspersky Deployment Toolkit configuration file.

Information is saved in the installation log, which is stored in the Kaspersky Deployment Toolkit database. The installation log of the initial infrastructure is saved to a file on the user's device. The storage period is indefinite; the installation log file will be deleted when Kaspersky Next XDR Expert is uninstalled. User names and passwords are stored in an encrypted form.

- Information about user accounts: full name and email address. The user enters data in the OSMP and KUMA Consoles. The data is stored in the database until the user deletes it.

- Information about tenants: tenant name, parent tenant name, description. The user enters data in the OSMP and KUMA Consoles. The data is stored in the database until the user deletes it.

- Alerts and incidents data:

- Alert data: triggered rules, compliance with the MITRE matrix, alert status, resolution, assigned operator, affected assets (devices and accounts), observables (IP, MD5, SHA256, URL, DNS domain, or DNS name) user name, host name, comments, and the changelog. This information is generated in the OSMP Console automatically, based on correlation events obtained from Kaspersky Unified Monitoring and Analysis Platform.

- Incident data: linked alerts, triggered rules, compliance with the MITRE matrix, incident status, resolution, affected assets (devices and accounts), observables (from the alert), comments, and the changelog. This information is generated in the OSMP Console automatically, according to the rules or manually by the user.

- Data on configuring the segmentation rules for generating incidents from alerts: the name and the rule triggering conditions, the template for the name of a new incident, a rule description, and the rule launch priority. The user enters data in the OSMP Console.

- Information about notification templates: template name, message subject, message template, template description, and detection rules. When the detection rules are triggered, notifications are sent. The user enters data in the OSMP Console.

The above data is stored in the database until the user deletes it.

- Playbook data:

- Playbook operational data, including data on response action parameters: name, description, tags, trigger, and algorithm. The user enters data in the OSMP console.

- Data on the execution of response actions within a playbook: data from integrated systems, data from devices.

- The full response history of alerts and incidents.

The data listed above is stored in the database for three days and then deleted. Data is completely deleted when Kaspersky Next XDR Expert is uninstalled.

- Integration settings data (both with Kaspersky solutions or services, and with third-party solutions that participate in Kaspersky Next XDR Expert scenarios):