Contents

- User guide

- KUMA resources

- Operations with resources

- Destinations

- Working with events

- Filtering and searching events

- Selecting Storage

- Generating an SQL query using a builder

- Manually creating an SQL query

- Filtering events by period

- Displaying names instead of IDs

- Presets

- Limiting the complexity of queries in alert investigation mode

- Saving and selecting events filter configuration

- Deleting event filter configurations

- Supported ClickHouse functions

- Viewing event detail areas

- Exporting events

- Configuring the table of events

- Refreshing events table

- Getting events table statistics

- Viewing correlation event details

- Normalizers

- Aggregation rules

- Enrichment rules

- Correlation rules

- Filters

- Active lists

- Viewing the table of active lists

- Adding active list

- Viewing the settings of an active list

- Changing the settings of an active list

- Duplicating the settings of an active list

- Deleting an active list

- Viewing records in the active list

- Searching for records in the active list

- Adding a record to an active list

- Duplicating records in the active list

- Changing a record in the active list

- Deleting records from the active list

- Import data to an active list

- Exporting data from the active list

- Predefined active lists

- Dictionaries

- Response rules

- Notification templates

- Connectors

- Secrets

- Segmentation rules

- Example of incident investigation with KUMA

- Incident conditions

- Step 1. Preliminary steps

- Step 2. Assigning an alert to a user

- Step 3. Check if the triggered correlation rule matches the data of the alert events

- Step 4. Analyzing alert information

- Step 5. False positive check

- Step 6. Determining alert severity

- Step 7. Incident creation

- Step 8. Investigation

- Step 9. Searching for related assets

- Step 10. Searching for related events

- Step 11. Recording the causes of the incident

- Step 12. Incident response

- Step 13. Restoring assets operability

- Step 14. Closing the incident

- Analytics

- Dashboard

- Reports

- Widgets

- Working with alerts

- Working with incidents

- About the incidents table

- Saving and selecting incident filter configuration

- Deleting incident filter configurations

- Viewing information about an incident

- Incident creation

- Incident processing

- Changing incidents

- Automatic linking of alerts to incidents

- Categories and types of incidents

- Interaction with RuCERT

- Special consideration for successful export from the KUMA hierarchical structure to RuCERT

- Exporting data to RuCERT

- Supplementing incident data on request

- Sending files to RuCERT

- Sending incidents involving personal information leaks to RuCERT

- Communication with RuCERT experts

- Supported categories and types of RuCERT incidents

- Notifications about the incident status change in RuCERT

- Retroscan

KUMA resources

Resources are KUMA components that contain parameters for implementing various functions: for example, establishing a connection with a given web address or converting data according to certain rules. Like parts of an erector set, these components are assembled into resource sets for services that are then used as the basis for creating KUMA services.

Resources are contained in the Resources section, Resources block of the KUMA web interface. The following resource types are available:

- Correlation rules—resources of this type contain rules for identifying event patterns that indicate threats. If the conditions specified in these resources are met, a correlation event is generated.

- Normalizers—resources of this type contain rules for converting incoming events into the format used by KUMA. After processing in the normalizer, the "raw" event becomes normalized and can be processed by other KUMA resources and services.

- Connectors—resources of this type contain settings for establishing network connections.

- Aggregation rules—resources of this type contain rules for combining several basic events of the same type into one aggregation event.

- Enrichment rules—resources of this type contain rules for supplementing events with information from third-party sources.

- Destinations—resources of this type contain settings for forwarding events to a destination for further processing or storage.

- Filters—resources of this type contain conditions for rejecting or selecting individual events from the stream of events.

- Response rules—resources of this type are used in correlators to, for example, execute scripts or launch Kaspersky Security Center tasks when certain conditions are met.

- Notification templates—resources of this type are used when sending notifications about new alerts.

- Active lists—resources of this type are used by correlators for dynamic data processing when analyzing events according to correlation rules.

- Dictionaries—resources of this type are used to store keys and their values, which may be required by other KUMA resources and services.

- Proxies—resources of this type contain settings for using proxy servers.

- Secrets—resources of this type are used to securely store confidential information (such as credentials) that KUMA needs to interact with external services.

When you click on a resource type, a window opens displaying a table with the available resources of this type. The resource table contains the following columns:

- Name—the name of a resource. Can be used to search for resources and sort them.

- Updated—the date and time of the last update of a resource. Can be used to sort resources.

- Created by—the name of the user who created a resource.

- Description—the description of a resource.

The maximum table size is not limited. If you want to select all resources, scroll to the end of the table and select the Select all check box, which selects all available resources in the table.

Resources can be organized into folders. The folder structure is displayed in the left part of the window: root folders correspond to tenants and contain a list of all resources of the tenant. All other folders nested within the root folder display the resources of an individual folder. When a folder is selected, the resources it contains are displayed as a table in the right pane of the window.

Resources can be created, edited, copied, moved from one folder to another, and deleted. Resources can also be exported and imported.

KUMA comes with a set of predefined resources, which can be identified by the "[OOTB]<resource_name>" name. OOTB resources are protected from editing.

If you want to adapt a predefined OOTB resource to your organization's infrastructure:

- In the Resources → <resource type> section, select the OOTB resource that you want to edit.

- In the upper part of the KUMA web interface, click Duplicate, then click Save.

A new resource named "[OOTB]<resource_name> - copy" is displayed in the web interface.

- Edit the copy of the predefined resource as necessary and save your changes.

The adapted resource is available for use.

Operations with resources

To manage KUMA resources, you can create, move, copy, edit, delete, import, and export them. These operations are available for all resources, regardless of the resource type.

KUMA resources reside in folders. You can add, rename, move, or delete resource folders.

Creating, renaming, moving, and deleting resource folders

Resources can be organized into folders. The folder structure is displayed in the left part of the window: root folders correspond to tenants and contain a list of all resources of the tenant. All other folders nested within the root folder display the resources of an individual folder. When a folder is selected, the resources it contains are displayed as a table in the right pane of the window.

You can create, rename, move and delete folders.

To create a folder:

- Select the folder in the tree where the new folder is required.

- Click the Add folder button.

The folder will be created.

To rename a folder:

- Locate the required folder in the folder structure.

- Hover over the name of the folder.

An icon will appear near the name of the folder.

- Open the drop-down list of the icon and select Rename.

The folder name will become active for editing.

- Enter the new folder name and press ENTER.

The folder name cannot be empty.

The folder will be renamed.

To move a folder:

Click and hold the folder name, then drag and drop the folder to the required place in the folder structure.

Folders cannot be dragged from one tenant to another.

To delete a folder:

- Locate the required folder in the folder structure.

- Hover over the name of the folder.

An icon will appear near the name of the folder.

- Open the drop-down list of the icon and select Delete.

The confirmation window appears.

- Click OK.

The folder will be deleted.

The program does not delete folders that contain files or subfolders.
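The folder rules above (a folder name cannot be empty, folders cannot be moved between tenants, and non-empty folders are not deleted) can be illustrated with a small model. This is only a sketch for reasoning about the rules, not KUMA code: the `Folder` class, its fields, and the tenant and folder names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)  # compare folders by identity, not by field values
class Folder:
    name: str
    tenant: str  # root folders correspond to tenants
    parent: Folder | None = field(default=None, repr=False)
    subfolders: list[Folder] = field(default_factory=list)
    resources: list[str] = field(default_factory=list)

    def add_folder(self, name: str) -> Folder:
        if not name.strip():
            raise ValueError("The folder name cannot be empty")
        child = Folder(name, self.tenant, parent=self)
        self.subfolders.append(child)
        return child

    def rename(self, new_name: str) -> None:
        if not new_name.strip():
            raise ValueError("The folder name cannot be empty")
        self.name = new_name

    def move_to(self, target: Folder) -> None:
        # Folders cannot be dragged from one tenant to another.
        if target.tenant != self.tenant:
            raise ValueError("Cannot move a folder to another tenant")
        if self.parent is not None:
            self.parent.subfolders.remove(self)
        target.subfolders.append(self)
        self.parent = target

    def delete(self) -> None:
        # Folders that still contain resources or subfolders are kept.
        if self.subfolders or self.resources:
            raise ValueError("Cannot delete a folder that is not empty")
        if self.parent is not None:
            self.parent.subfolders.remove(self)
            self.parent = None


# Hypothetical tenants and folders, used only to exercise the rules.
tenant_a = Folder("Tenant A", tenant="Tenant A")
rules = tenant_a.add_folder("Rules")
archive = rules.add_folder("Archive")
archive.delete()  # allowed: the folder is empty
print([f.name for f in tenant_a.subfolders], rules.subfolders)
```

In this model the tenant is copied from the parent when a folder is created, which is one simple way to make the cross-tenant check cheap.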

Creating, duplicating, moving, editing, and deleting resources

You can create, move, copy, edit, and delete resources.

To create a resource:

- In the Resources → <resource type> section, select or create a folder where you want to add the new resource.

Root folders correspond to tenants. For a resource to be available to a specific tenant, it must be created in the folder of that tenant.

- Click the Add <resource type> button.

The window for configuring the selected resource type opens. The available configuration parameters depend on the resource type.

- Enter a unique resource name in the Name field.

- Specify the required parameters (marked with a red asterisk).

- If necessary, specify the optional parameters.

- Click Save.

The resource will be created and available for use in services and other resources.

To move a resource to a new folder:

- In the Resources → <resource type> section, find the required resource in the folder structure.

- Select the check box near the resource you want to move. You can select multiple resources.

An icon appears near the selected resources.

- Use the icon to drag and drop the resources to the required folder.

The resources will be moved to the new folder.

You can only move resources to folders of the tenant in which the resources were created. Resources cannot be moved to another tenant's folders.

To copy a resource:

- In the Resources → <resource type> section, find the required resource in the folder structure.

- Select the check box next to the resource that you want to copy and click Duplicate.

A window opens with the settings of the resource that you have selected for copying. The available configuration parameters depend on the resource type.

The <selected resource name> - copy value is displayed in the Name field.

- Make the necessary changes to the parameters.

- Enter a unique name in the Name field.

- Click Save.

The copy of the resource will be created.

To edit a resource:

- In the Resources → <resource type> section, find the required resource in the folder structure.

- Select the resource.

A window with the settings of the selected resource opens. The available configuration parameters depend on the resource type.

- Make the necessary changes to the parameters.

- Click Save.

The resource will be updated. If this resource is used in a service, restart the service to apply the new settings.

To delete a resource:

- In the Resources → <resource type> section, find the required resource in the folder structure.

- Select the check box next to the resource that you want to delete and click Delete.

A confirmation window opens.

- Click OK.

The resource will be deleted.

Updating resources

Kaspersky regularly releases packages with resources that can be imported from the repository. You can specify an email address in the settings of the Repository update task. After the first execution of the task, KUMA starts sending notifications about the packages available for update to the specified address. You can update the repository, analyze the contents of each update, and decide whether to import and deploy the new resources in the operating infrastructure.

KUMA supports updates from Kaspersky servers and from custom sources, including offline update using the update mirror mechanism. If you have other Kaspersky products in the infrastructure, you can connect KUMA to existing update mirrors. The update subsystem expands KUMA capabilities to respond to changes in the threat landscape and the infrastructure. The capability to use it without direct Internet access ensures the privacy of the data processed by the system.

To update resources, perform the following steps:

- Update the repository to deliver the resource packages to the repository. The repository update is available in two modes:

- Automatic update

- Manual update

- Import the resource packages from the updated repository into the tenant.

For the service to start using the resources, make sure that the updated resources are mapped after performing the import. If necessary, link the resources to collectors, correlators, or agents, and update the settings.

To enable automatic update:

- In the Settings → Repository update section, configure the Data refresh interval in hours. The default value is 24 hours.

- Specify the Update source. The following options are available:

- Kaspersky update servers.

You can view the list of servers in the Knowledge Base, article 15998.

- Custom source:

- The URL to the shared folder on the HTTP server.

- The full path to the local folder on the host where the KUMA Core is installed.

If a local folder is used, the kuma system user must have read access to this folder and its contents.

- Specify the Emails for notification by clicking the Add button. Notifications are sent to the specified email addresses when new packages, or new versions of packages already imported into the tenant, become available in the repository.

If you specify the email address of a KUMA user, the Receive email notifications check box must be selected in the user profile. For emails that do not belong to any KUMA user, the messages are received without additional settings. The settings for connecting to the SMTP server must be specified in all cases.

- Click Save. The update task starts shortly. Then the task restarts according to the schedule.
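When a local folder is used as the custom source, the read-access requirement for the kuma user can be checked before the first update run. The following is a minimal sketch, assuming it is executed under the account whose access you want to verify; the function name `unreadable_paths` and the temporary demo folder are illustrative and not part of KUMA.

```python
import os
import tempfile


def unreadable_paths(root: str) -> list:
    """Return the paths under root that the current user cannot read."""
    # A directory needs read and execute (search) permission to be listed.
    if not os.access(root, os.R_OK | os.X_OK):
        return [root]
    problems = []
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames:
            path = os.path.join(dirpath, name)
            if not os.access(path, os.R_OK | os.X_OK):
                problems.append(path)
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.access(path, os.R_OK):
                problems.append(path)
    return problems


# Demo on a throwaway folder; replace it with the real update folder path.
with tempfile.TemporaryDirectory() as folder:
    with open(os.path.join(folder, "package.bin"), "wb") as f:
        f.write(b"example")
    print(unreadable_paths(folder))
```

An empty list means every file and subfolder is readable by the account that ran the check. To test the kuma account specifically, the script would have to be started under that account, for example with `sudo -u kuma`.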

To manually start the repository update:

- To disable automatic updates, in the Settings → Repository update section, select the Disable automatic update check box. This check box is cleared by default. You can also start a manual repository update without disabling automatic update. Starting an update manually does not affect the automatic update schedule.

- Specify the Update source. The following options are available:

- Kaspersky update servers.

- Custom source:

- The URL to the shared folder on the HTTP server.

- The full path to the local folder on the host where the KUMA Core is installed.

If a local folder is used, the kuma user must have access to this folder and its contents.

- Specify the Emails for notification by clicking the Add button. Notifications are sent to the specified email addresses when new packages, or new versions of packages already imported into the tenant, become available in the repository.

If you specify the email address of a KUMA user, the Receive email notifications check box must be selected in the user profile. For emails that do not belong to any KUMA user, the messages are received without additional settings. The settings for connecting to the SMTP server must be specified in all cases.

- Click Run update. This saves the settings and manually starts the Repository update task.

Configuring a custom source using Kaspersky Update Utility

You can update resources without Internet access by using a custom update source via the Kaspersky Update Utility.

Configuration consists of the following steps:

- Configuring a custom source using Kaspersky Update Utility:

- Installing and configuring Kaspersky Update Utility on one of the computers in the corporate LAN.

- Configuring copying of updates to a shared folder in Kaspersky Update Utility settings.

- Configuring update of the KUMA repository from a custom source.

Configuring a custom source using Kaspersky Update Utility:

You can download the Kaspersky Update Utility distribution kit from the Kaspersky Technical Support website.

- In Kaspersky Update Utility, enable the download of updates for KUMA 2.1:

- Under Applications – Perimeter control, select the check box next to KUMA 2.1 to enable the update capability.

- If you work with Kaspersky Update Utility using the command line, add the following line to the [ComponentSettings] section of the updater.ini configuration file or specify the true value for an existing line:

KasperskyUnifiedMonitoringAndAnalysisPlatform_2_1=true

- In the Downloads section, specify the update source. By default, Kaspersky update servers are used as the update source.

- In the Downloads section, in the Update folders group of settings, specify the shared folder for Kaspersky Update Utility to download updates to. The following options are available:

- Specify the local folder on the host where Kaspersky Update Utility is installed. Deploy the HTTP server for distributing updates and publish the local folder on it. In KUMA, in the Settings → Repository update → Custom source section, specify the URL of the local folder published on the HTTP server.

- Specify the local folder on the host where Kaspersky Update Utility is installed. Make this local folder available over the network. Mount the network-accessible local folder on the host where KUMA is installed. In KUMA, in the Settings → Repository update → Custom source section, specify the full path to the local folder.
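For the command-line variant, the updater.ini change described above can be scripted. The sketch below assumes that updater.ini is a plain INI file that Python's `configparser` can parse; this is not confirmed by the documentation. The function works on text, so locating and writing the file is left to you. Note that `configparser` does not preserve comments, so keep a backup of the original file.

```python
import configparser
import io

SECTION = "ComponentSettings"  # section name taken from the step above
KEY = "KasperskyUnifiedMonitoringAndAnalysisPlatform_2_1"


def enable_kuma_updates(ini_text: str) -> str:
    """Return the INI text with the KUMA 2.1 component set to true."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep the original case of option names
    parser.read_string(ini_text)
    if not parser.has_section(SECTION):
        parser.add_section(SECTION)
    parser.set(SECTION, KEY, "true")
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


# Hypothetical file content used only for the demonstration.
sample = "[ComponentSettings]\n" + KEY + "=false\n"
print(enable_kuma_updates(sample))
```

Setting `optionxform` to `str` matters here because `configparser` lowercases option names by default, which would change the key.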

For detailed information about working with Kaspersky Update Utility, refer to the Kaspersky Knowledge Base.
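The first Update folders option requires an HTTP server that publishes the local folder. Any web server can do this; as a quick way to try the setup in a test environment, the folder can be served with Python's standard library. This is a sketch only: the helper name `make_update_server`, the default host, and the port are assumptions, and the built-in server is not intended for production use.

```python
from functools import partial
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


def make_update_server(folder: str, host: str = "127.0.0.1", port: int = 0):
    """Create an HTTP server that publishes the given folder (read-only)."""
    handler = partial(SimpleHTTPRequestHandler, directory=folder)
    # Port 0 lets the operating system pick a free port.
    return ThreadingHTTPServer((host, port), handler)


# Create the server for the current folder, report the port, and close it.
server = make_update_server(".")
print("Update folder would be served on port", server.server_address[1])
server.server_close()
```

To actually serve the folder, call `server.serve_forever()` instead of closing the server, bind to an address that the KUMA Core host can reach, and specify the resulting URL as the custom source.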

Exporting resources

If shared resources are hidden for a user, the user cannot export shared resources or resources that use shared resources.

To export resources:

- In the Resources section, click Export resources.

The Export resources window opens with the tree of all available resources.

- In the Password field, enter the password that will protect the exported data.

- In the Tenant drop-down list, select the tenant whose resources you want to export.

- Select the check boxes next to the resources you want to export.

If selected resources are linked to other resources, linked resources will be exported, too.

- Click the Export button.

The resources are saved to your computer as a password-protected file, according to your browser settings. Secret resources are exported blank.

Importing resources

To import resources:

- In the Resources section, click Import resources.

The Resource import window opens.

- In the Tenant drop-down list, select the tenant to assign the imported resources to.

- In the Import source drop-down list, select one of the following options:

- File

If you select this option, enter the password and click the Import button.

- Repository

If you select this option, a list of packages available for import is displayed. We recommend starting the import with the "OOTB resources for KUMA 2.1" package and then importing the packages one by one. If you receive a "Database error" error when importing packages, try importing the package mentioned in the error message again, selecting only the specified package for import. You can also configure automatic updates.

You can select one or more packages to import and click the Import button.

The imported resources can only be deleted. To rename, edit or move an imported resource, make a copy of the resource using the Duplicate button and perform the desired actions with the resource copy. When importing future versions of the package, the duplicate is not updated because it is a separate object.

- Resolve the conflicts between the resources imported from the file and the existing resources if they occur. Read more about resource conflicts below.

- If the name, type, and guid of an imported resource fully match the name, type, and guid of an existing resource, the Conflicts window opens with the table displaying the type and the name of the conflicting resources. Resolve displayed conflicts:

- To replace the existing resource with a new one, click Replace.

To replace all conflicting resources, click Replace all.

- To leave the existing resource, click Skip.

To keep all existing resources, click Skip all.

- Click the Resolve button.

The resources are imported to KUMA. The Secret resources are imported blank.

About conflict resolving

When resources are imported into KUMA from a file, they are compared with existing resources; the following parameters are compared:

- Name and kind. If an imported resource's name and kind parameters match those of the existing one, the imported resource's name is automatically changed.

- ID. If identifiers of two resources match, a conflict appears that must be resolved by the user. This could happen when you import resources to the same KUMA server from which they were exported.

When resolving a conflict, you can either replace the existing resource with the imported one or keep the existing resource and skip the imported one.
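The comparison described above can be summarized as a small decision function. This is a conceptual sketch, not KUMA's implementation: the `Resource` and `ImportOutcome` types are hypothetical, and checking the ID before the name and kind is an assumption about precedence that the text does not state.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class ImportOutcome(Enum):
    IMPORT = "import as is"
    RENAME = "import under an automatically changed name"
    CONFLICT = "user must choose Replace or Skip"


@dataclass(frozen=True)
class Resource:
    id: str
    name: str
    kind: str


def classify(imported: Resource, existing: list[Resource]) -> ImportOutcome:
    """Decide how an imported resource relates to the existing ones."""
    # Matching identifiers produce a conflict that the user resolves.
    if any(r.id == imported.id for r in existing):
        return ImportOutcome.CONFLICT
    # Matching name and kind only: the imported resource is renamed.
    if any(r.name == imported.name and r.kind == imported.kind for r in existing):
        return ImportOutcome.RENAME
    return ImportOutcome.IMPORT


# Hypothetical resources used only for the demonstration.
existing = [Resource("id-1", "Syslog connector", "connector")]
print(classify(Resource("id-1", "Syslog connector", "connector"), existing))
print(classify(Resource("id-2", "Syslog connector", "connector"), existing))
print(classify(Resource("id-3", "Other", "filter"), existing))
```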

Some resources are linked: for example, in some types of connectors, the connector secret must be specified. The secrets are also imported if they are linked to a connector. Such linked resources are exported and imported together.

Special considerations of import:

- Resources are imported to the selected tenant.

- Starting with version 2.1.3, if a linked resource is in the Shared tenant, it ends up in the Shared tenant when imported.

- In version 2.1.3 and later, in the Conflicts window, the Parent column always displays the top-most parent resource among those that were selected during import.

- In version 2.1.3 and later, if a conflict occurs during import and you choose to replace the existing resource with a new one, all other resources linked to the replaced resource are automatically replaced with the imported resources as well.

Known bugs in version 2.1.3:

- The linked resource ends up in the tenant specified during the import, and not in the Shared tenant, as indicated in the Conflicts window, under the following conditions:

- The associated resource is initially in the Shared tenant.

- In the Conflicts window, you select Skip for all parent objects of the linked resource from the Shared tenant.

- You leave the linked resource from the Shared tenant for replacement.

- After importing, the categories do not have a tenant specified in the filter under the following conditions:

- The filter contains linked asset categories from different tenants.

- Asset category names are the same.

- You are importing this filter with linked asset categories to a new server.

- In Tenant 1, the name of the asset category is duplicated under the following conditions:

- In Tenant 1, you have a filter with linked asset categories from Tenant 1 and the Shared tenant.

- The names of the linked asset categories are the same.

- You are importing such a filter from Tenant 1 to the Shared tenant.

- You cannot import conflicting resources into the same tenant.

The error "Unable to import conflicting resources into the same tenant" means that the imported package contains conflicting resources from different tenants and cannot be imported into the Shared tenant.

Solution: Select a tenant other than Shared to import the package. In this case, during the import, resources originally located in the Shared tenant are imported into the Shared tenant, and resources from the other tenant are imported into the tenant selected during import.

- Only the general administrator can import categories into the Shared tenant.

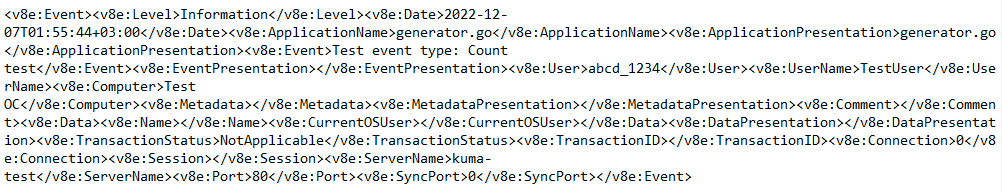

The error "Only the general administrator can import categories into the Shared tenant" means that the imported package contains resources with linked shared asset categories. You can see the categories or resources with linked shared asset categories in the KUMA Core log. Path to the Core log:

/opt/kaspersky/kuma/core/log/core

Solution. Choose one of the following options:

- Do not import resources to which shared categories are linked: clear the check boxes next to the relevant resources.

- Perform the import under a General administrator account.

- Only the general administrator can import resources into the Shared tenant.

The error "Only the general administrator can import resources into the Shared tenant" means that the imported package contains resources with linked shared resources. You can see the resources with linked shared resources in the KUMA Core log. Path to the Core log:

/opt/kaspersky/kuma/core/log/core

Solution. Choose one of the following options:

- Do not import resources that have linked resources from the Shared tenant, and the shared resources themselves: clear the check boxes next to the relevant resources.

- Perform the import under a General administrator account.

Destinations

Destinations define network settings for sending normalized events. Collectors and correlators use destinations to describe where to send processed events. Typically, the destination points are the correlator and storage.

The settings of destinations are configured on two tabs: Basic settings and Advanced settings. The available settings depend on the selected type of destination:

- nats-jetstream—used for NATS communications.

- tcp—used for communications over TCP.

- http—used for HTTP communications.

- diode—used to transmit events using a data diode.

- kafka—used for Kafka communications.

- file—used for writing to a file.

- storage—used to transmit data to the storage.

- correlator—used to transmit data to the correlator.

Nats type

The nats-jetstream type is used for NATS communications.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, nats-jetstream. |
| URL | Required setting. URL that you want to connect to. |
| Topic | Required setting. The topic of NATS messages. Must contain Unicode characters. |
| Delimiter | Specify a character that defines where one event ends and the other begins. By default, |
| Authorization | Type of authorization when connecting to the specified URL. Possible values: |
| Description | Resource description: up to 4,000 Unicode characters. |
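The constraints stated in the Basic settings tables for the nats-jetstream, tcp, and http destination types can be checked programmatically before the values are entered. The sketch below is illustrative only: `validate_destination` and its parameters are hypothetical and do not correspond to a KUMA API, and only the limits stated in this section are checked.

```python
DESTINATION_TYPES = {
    "nats-jetstream", "tcp", "http", "diode",
    "kafka", "file", "storage", "correlator",
}
# Types whose Basic settings tables in this section mark URL as required.
URL_REQUIRED_TYPES = {"nats-jetstream", "tcp", "http"}


def validate_destination(name, tenant, dest_type, url="", topic="", description=""):
    """Return a list of problems found in the destination settings."""
    errors = []
    if not 1 <= len(name) <= 128:
        errors.append("Name must contain 1 to 128 characters")
    if not tenant:
        errors.append("Tenant is required")
    if dest_type not in DESTINATION_TYPES:
        errors.append("Unknown destination type: " + dest_type)
    if dest_type in URL_REQUIRED_TYPES and not url:
        errors.append("URL is required")
    if dest_type == "nats-jetstream" and not topic:
        errors.append("Topic is required for nats-jetstream")
    if len(description) > 4000:
        errors.append("Description must not exceed 4,000 characters")
    return errors


# Hypothetical values used only for the demonstration.
print(validate_destination("To storage", "Main", "nats-jetstream", url="example:4222"))
```

Python's `len` counts Unicode code points, which is a reasonable approximation of the "Unicode characters" limits in the tables.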

Advanced settings tab

| Setting | Description |
|---|---|
| Compression | You can use Snappy compression. By default, compression is disabled. |
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Timeout | The time (in seconds) to wait for a response from another service or component. The default value is |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| Cluster ID | ID of the NATS cluster. |
| TLS mode | Use of TLS encryption. Available values: |
| Delimiter | In the drop-down list, you can select the character to mark the boundary between events. By default, |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In the Filter section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |
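The rotation rule described for the disk buffer (new events replace the oldest events once the size limit is reached) can be modeled in a few lines. This is a conceptual in-memory sketch, not how KUMA stores the buffer on disk; the `RotatingBuffer` class and the tiny limit in the demo are hypothetical.

```python
from __future__ import annotations

from collections import deque


class RotatingBuffer:
    """Size-limited buffer in which new events evict the oldest ones."""

    def __init__(self, limit_bytes: int) -> None:
        self.limit_bytes = limit_bytes
        self.used_bytes = 0
        self._events: deque[bytes] = deque()

    def append(self, event: bytes) -> None:
        if len(event) > self.limit_bytes:
            raise ValueError("Event is larger than the buffer limit")
        # Drop the oldest events until the new one fits.
        while self.used_bytes + len(event) > self.limit_bytes:
            oldest = self._events.popleft()
            self.used_bytes -= len(oldest)
        self._events.append(event)
        self.used_bytes += len(event)

    def events(self) -> list[bytes]:
        return list(self._events)


# A 10-byte limit makes the rotation visible with three 4-byte events.
buffer = RotatingBuffer(limit_bytes=10)
for event in (b"aaaa", b"bbbb", b"cccc"):
    buffer.append(event)
print(buffer.events())
```

With these values, the third event does not fit next to the first two, so the oldest event is evicted and the buffer keeps the two most recent ones.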

Tcp type

The tcp type is used for TCP communications.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, tcp. |
| URL | Required setting. URL that you want to connect to. Available formats: IPv6 addresses are also supported. When using IPv6 addresses, you must also specify the interface in the For example, |
| Description | Resource description: up to 4,000 Unicode characters. |

Advanced settings tab

| Setting | Description |
|---|---|
| Compression | You can use Snappy compression. By default, compression is disabled. |
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Timeout | The time (in seconds) to wait for a response from another service or component. The default value is |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| TLS mode | TLS encryption mode using certificates in pem x509 format. Available values: When using TLS, it is impossible to specify an IP address as a URL. |
| Delimiter | In the drop-down list, you can select the character to mark the boundary between events. By default, |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In this section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |

Http type

The http type is used for HTTP communications.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, http. |
| URL | Required setting. URL that you want to connect to. Available formats: IPv6 addresses are also supported, however, when you use them, you must specify the interface as well: |
| Authorization | Type of authorization when connecting to the specified URL. Possible values: |
| Description | Resource description: up to 4,000 Unicode characters. |

Advanced settings tab

| Setting | Description |
|---|---|
| Compression | You can use Snappy compression. By default, compression is disabled. |
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Timeout | The time (in seconds) to wait for a response from another service or component. The default value is |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| TLS mode | Use of TLS encryption. Available values: |
| URL selection policy | From the drop-down list, you can select the method of deciding which URL to send events to if multiple URLs are specified. Available values: |
| Delimiter | In the drop-down list, you can select the character to mark the boundary between events. By default, \n is used. |
| Path | The path that must be added for the URL request. For example, if you specify the path |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | The number of services that are processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Health check path | The URL for sending requests to obtain health information about the system that the destination resource is connecting to. |
| Health check timeout | Frequency of the health check in seconds. |
| Health Check Disabled | Check box that disables the health check. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In the Filter section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |
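The rotation rule of the disk buffer (new events replace the oldest ones once the size limit is reached) can be illustrated with a toy in-memory model. This is only a sketch: the `RotatingBuffer` class and its handling of oversized events are assumptions made for illustration and do not describe how KUMA stores the buffer on disk.

```python
from collections import deque


class RotatingBuffer:
    """Toy model of a size-limited buffer where new events evict the oldest."""

    def __init__(self, limit_bytes):
        self.limit_bytes = limit_bytes
        self.events = deque()
        self.used_bytes = 0

    def push(self, event):
        if len(event) > self.limit_bytes:
            return  # assumption: an event larger than the whole buffer is skipped
        # Evict the oldest events until the new one fits.
        while self.events and self.used_bytes + len(event) > self.limit_bytes:
            self.used_bytes -= len(self.events.popleft())
        self.events.append(event)
        self.used_bytes += len(event)


buf = RotatingBuffer(limit_bytes=10)
for event in (b"aaaa", b"bbbb", b"cccc"):
    buf.push(event)
print(list(buf.events))  # the oldest event, b"aaaa", has been rotated out
```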

Diode type

The diode type is used to transmit events using a data diode.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, diode. |
| Data diode source directory | Required setting. The directory from which the data diode moves events. The path can contain up to 255 Unicode characters. |
| Temporary directory | Directory in which events are prepared for transmission to the data diode. Events are collected in a file when a timeout (10 seconds by default) or a buffer overflow occurs. The prepared file is moved to the directory specified in the Data diode source directory field. The checksum (SHA-256) of the file contents is used as the name of the file containing events. The temporary directory must be different from the data diode source directory. |
| Description | Resource description: up to 4,000 Unicode characters. |
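The hand-over between the temporary directory and the data diode source directory can be sketched as follows. The function name `hand_over_to_diode`, the newline-joined file layout, and the use of `os.replace` are assumptions made for illustration. Only the SHA-256 file naming and the requirement that the two directories differ come from the description above.

```python
import hashlib
import os
import tempfile


def hand_over_to_diode(events, temp_dir, source_dir, delimiter=b"\n"):
    """Write events to a file in temp_dir, then move it to source_dir.

    The file is named after the SHA-256 checksum of its contents.
    """
    if os.path.abspath(temp_dir) == os.path.abspath(source_dir):
        raise ValueError("temporary directory must differ from the source directory")
    payload = delimiter.join(events)  # assumed file layout, for illustration only
    name = hashlib.sha256(payload).hexdigest()
    temp_path = os.path.join(temp_dir, name)
    with open(temp_path, "wb") as fh:
        fh.write(payload)
    final_path = os.path.join(source_dir, name)
    # Assumes both directories are on the same file system.
    os.replace(temp_path, final_path)
    return final_path


with tempfile.TemporaryDirectory() as tmp, tempfile.TemporaryDirectory() as src:
    moved = hand_over_to_diode([b"event-1", b"event-2"], tmp, src)
    print(os.path.basename(moved))
```

Preparing the file in a separate directory means the data diode never picks up a partially written file.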

Advanced settings tab

| Setting | Description |
|---|---|
| Compression | You can use Snappy compression. By default, compression is disabled. This setting must match for the connector and destination resources used to relay events from an isolated network segment via the data diode. |
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Delimiter | In the drop-down list, you can select the character to mark the boundary between events. By default, This setting must match for the connector and destination resources used to relay events from an isolated network segment via the data diode. |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Filter | In the Filter section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |

Kafka type

The kafka type is used for Kafka communications.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, kafka. |
| URL | Required setting. URL that you want to connect to. Available formats: You can add multiple addresses using the URL button. |
| Topic | Required setting. Subject of Kafka messages. Must contain from 1 to 255 of the following characters: a–z, A–Z, 0–9, ".", "_", "-". |
| Delimiter | Specify a character that defines where one event ends and the other begins. By default, |
| Authorization | Type of authorization when connecting to the specified URL. Possible values: |
| Description | Resource description: up to 4,000 Unicode characters. |
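The character and length constraints on the Topic setting can be checked before a destination is saved. The following is a minimal sketch. The helper name `is_valid_topic` is hypothetical, and the pattern simply restates the rule from the table above.

```python
import re

# 1 to 255 characters from a-z, A-Z, 0-9, ".", "_", "-"
TOPIC_RE = re.compile(r"[A-Za-z0-9._-]{1,255}")


def is_valid_topic(topic):
    """Return True if the topic name satisfies the documented constraints."""
    return TOPIC_RE.fullmatch(topic) is not None


print(is_valid_topic("kuma.events_01"))
print(is_valid_topic("bad topic"))
```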

Advanced settings tab

| Setting | Description |
|---|---|
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Timeout | The time (in seconds) to wait for a response from another service or component. The default value is |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| TLS mode | Use of TLS encryption. Available values: |
| Delimiter | In the drop-down list, you can select the character to mark the boundary between events. By default, |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In this section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |

File type

The file type is used for writing data to a file.

If you delete a destination of the 'file' type used in a service, that service must be restarted.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, file. |
| URL | Required setting. Path to the file to which the events must be written. |
| Description | Resource description: up to 4,000 Unicode characters. |

Advanced settings tab

| Setting | Description |
|---|---|
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| Delimiter | In the drop-down list, you can select the character to mark the boundary between events. \n is used by default. |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Number of handlers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In the Filter section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |
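The interplay of the Buffer size, Buffer flush interval, and Delimiter settings can be pictured as a batcher that emits a batch when either the buffer fills up or the flush interval elapses. This is a simplified sketch and not KUMA's implementation: the `EventBatcher` class, the 1-second interval used as a default, and the exact flush conditions are all assumptions.

```python
class EventBatcher:
    """Collects events and emits delimiter-separated batches."""

    def __init__(self, buffer_size=1024, flush_interval=1.0, delimiter=b"\n"):
        self.buffer_size = buffer_size        # bytes; 1 KB as in the table above
        self.flush_interval = flush_interval  # seconds; hypothetical value
        self.delimiter = delimiter
        self.pending = []
        self.pending_bytes = 0
        self.last_flush = 0.0

    def add(self, event, now):
        """Queue an event; return a batch if a flush is due, otherwise None."""
        self.pending.append(event)
        self.pending_bytes += len(event) + len(self.delimiter)
        buffer_full = self.pending_bytes >= self.buffer_size
        interval_elapsed = now - self.last_flush >= self.flush_interval
        if buffer_full or interval_elapsed:
            return self.flush(now)
        return None

    def flush(self, now):
        batch = self.delimiter.join(self.pending) + self.delimiter
        self.pending, self.pending_bytes, self.last_flush = [], 0, now
        return batch


batcher = EventBatcher(buffer_size=16, flush_interval=5.0)
print(batcher.add(b"event-1", now=0.0))  # buffer not full yet, nothing is sent
print(batcher.add(b"event-2", now=1.0))  # buffer is full, both events are sent
```

Passing the current time in as `now` keeps the sketch deterministic. A real service would read a clock instead.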

Storage type

The storage type is used to transmit data to the storage.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, storage. |
| URL | Required setting. URL that you want to connect to. Available formats: You can add multiple addresses using the URL button. The URL field supports search for services by FQDN, IP address, and name. Search string formats: |
| Description | Resource description: up to 4,000 Unicode characters. |

Advanced settings tab

| Setting | Description |
|---|---|
| Proxy server | Drop-down list for selecting a proxy server. |
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Timeout | The time (in seconds) to wait for a response from another service or component. The default value is |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| URL selection policy | Drop-down list in which you can select a method for determining which URL to send events to if several URLs have been specified: |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Health check timeout | Frequency of the health check in seconds. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In this section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |

Correlator type

The correlator type is used to transmit data to the correlator.

Basic settings tab

| Setting | Description |
|---|---|
| Name | Required setting. Unique name of the resource. Must contain 1 to 128 Unicode characters. |
| Tenant | Required setting. The name of the tenant that owns the resource. |
| Disabled switch | Used when events must not be sent to the destination. By default, sending events is enabled. |
| Type | Required setting. Destination type, correlator. |
| URL | Required setting. URL that you want to connect to. Available formats: You can add multiple addresses using the URL button. The URL field supports search for services by FQDN, IP address, and name. Search string formats: |
| Description | Resource description: up to 4,000 Unicode characters. |

Advanced settings tab

| Setting | Description |
|---|---|
| Proxy server | Drop-down list for selecting a proxy server. |
| Buffer size | Sets the size of the buffer. The default value is 1 KB, and the maximum value is 64 MB. |
| Timeout | The time (in seconds) to wait for a response from another service or component. The default value is |
| Disk buffer size limit | Size of the disk buffer in bytes. The default value is 10 GB. |
| URL selection policy | Drop-down list in which you can select a method for determining which URL to send events to if several URLs have been specified: |
| Buffer flush interval | Time (in seconds) between sending batches of data to the destination. The default value is |
| Workers | This field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server. |
| Health check timeout | Frequency of the health check in seconds. |
| Debug | A drop-down list in which you can specify whether resource logging must be enabled. The default value is Disabled. |
| Disk buffer disabled | Drop-down list that lets you enable or disable the disk buffer. By default, the disk buffer is enabled. The disk buffer is used if the collector cannot send normalized events to the destination. The amount of allocated disk space is limited by the value of the Disk buffer size limit setting. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. |
| Filter | In the Filter section, you can specify the criteria for identifying events that must be processed by the resource. You can select an existing filter from the drop-down list or create a new filter. |

Predefined destinations

Destinations listed in the table below are included in the KUMA distribution kit.

Predefined destinations

| Destination name | Description |
|---|---|
| [OOTB] Correlator | Sends events to a correlator. |
| [OOTB] Storage | Sends events to storage. |

Working with events

In the Events section of the KUMA web interface, you can inspect events received by the program to investigate security threats or create correlation rules. The events table displays the data received after the SQL query is executed.

Events can be sent to the correlator for a retroscan.

The event date format depends on the localization language selected in the application settings. Possible date format options:

- English localization: YYYY-MM-DD.

- Russian localization: DD.MM.YYYY.
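The two date formats listed above correspond to the following `strftime` patterns. This is a small sketch: the locale keys `"en"` and `"ru"` and the helper `format_event_date` are hypothetical names, not KUMA settings.

```python
from datetime import date

# Date patterns for the two localizations mentioned above.
DATE_FORMATS = {
    "en": "%Y-%m-%d",  # YYYY-MM-DD
    "ru": "%d.%m.%Y",  # DD.MM.YYYY
}


def format_event_date(value, locale):
    """Format a date the way the selected localization displays it."""
    return value.strftime(DATE_FORMATS[locale])


print(format_event_date(date(2024, 3, 7), "en"))
print(format_event_date(date(2024, 3, 7), "ru"))
```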

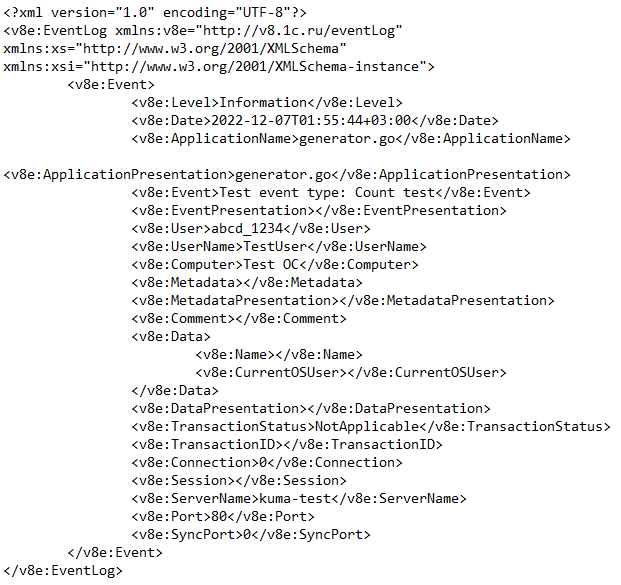

Filtering and searching events

The Events section of the KUMA web interface does not show any data by default. To view events, you need to define an SQL query in the search field and click the button that runs the query. The SQL query can be entered manually or it can be generated using a query builder.

Data aggregation and grouping is supported in SQL queries.

You can add filter conditions to an already generated SQL query in the window for viewing statistics, the events table, and the event details area:

- Changing a query from the Statistics window

- Changing a query from the events table

- Changing a query from the Event details area

After modifying a query, all query parameters, including the added filter conditions, are transferred to the query builder and the search field.

When you switch to the query builder, the parameters of a query entered manually in the search field are not transferred to the builder, so you will need to create your query again. Also, the query created in the builder does not overwrite the query that was entered into the search string until you click the Apply button in the builder window.

In the SQL query input field, you can enable the display of control characters.

You can also filter events by time period. Search results can be automatically updated.

The filter configuration can be saved. Existing filter configurations can be deleted.

Filter functions are available for users regardless of their roles.

When accessing certain event fields with IDs, KUMA returns the corresponding names.

For more details on SQL, refer to the ClickHouse documentation. See also KUMA operator usage and supported functions.

Selecting Storage

Events that are displayed in the Events section of the KUMA web interface are retrieved from storage (from the ClickHouse cluster). Depending on the demands of your company, you may have more than one Storage. However, you can only receive events from one Storage at a time, so you must specify which one you want to use.

To select the Storage you want to receive events from:

In the Events section of the KUMA web interface, open the drop-down list of storages and select the relevant storage cluster.

Now events from the selected storage are displayed in the events table. The name of the selected storage is displayed in the drop-down list.

The drop-down list displays only the clusters of tenants available to the user, and the cluster of the main tenant.

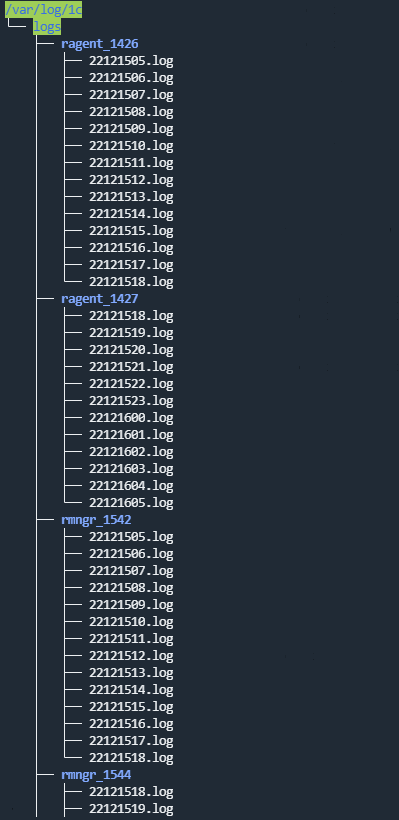

Generating an SQL query using a builder

In KUMA, you can use a query builder to generate an SQL query for filtering events.

To generate an SQL query using a builder:

- In the Events section of the KUMA web interface, click the button that opens the query builder.

The filter constructor window opens.

- Generate a search query by providing data in the following parameter blocks:

- SELECT: event fields that should be returned. The * value is selected by default, which means that all available event fields are returned. To make viewing the search results easier, select the necessary fields in the drop-down list. In this case, only the data for the selected fields is displayed in the table. Note that SELECT * increases the query execution time but eliminates the need to list the fields manually in the query.

When selecting an event field, you can use the field on the right of the drop-down list to specify an alias for the column of displayed data, and you can use the right-most drop-down list to select the operation to perform on the data: count, max, min, avg, sum.

If you are using aggregation functions in a query, you cannot customize the events table display, sort events in ascending or descending order, or receive statistics.

When filtering by alert-related events in alert investigation mode, you cannot perform operations on the data of event fields or assign names to the columns of displayed data.

- FROM—data source. Select the events value.

- WHERE—conditions for filtering events.

Conditions and groups of conditions can be added by using the Add condition and Add group buttons. The AND operator value is selected by default in a group of conditions, but the operator can be changed by clicking on this value. Available values: AND, OR, NOT. The structure of conditions and condition groups can be changed by using the drag icon to drag and drop expressions.

Adding filter conditions:

- In the drop-down list on the left, select the event field that you want to use for filtering.

- Select the necessary operator from the middle drop-down list. The available operators depend on the type of value of the selected event field.

- Enter the value of the condition. Depending on the selected type of field, you may have to manually enter the value, select it from the drop-down list, or select it on the calendar.

Filter conditions can be deleted by using the corresponding button. Group conditions are deleted using the Delete group button.

- GROUP BY: event fields or aliases to be used for grouping the returned data.

If you are using data grouping in a query, you cannot customize the events table display, sort events in ascending or descending order, receive statistics, or perform a retroscan.

When filtering by alert-related events in alert investigation mode, you cannot group the returned data.

- ORDER BY—columns used as the basis for sorting the returned data. In the drop-down list on the right, you can select the necessary order: DESC—descending, ASC—ascending.

- LIMIT—number of strings displayed in the table.

The default value is 250.

If you are filtering events by user-defined period and the number of strings in the search results exceeds the defined value, you can click the Show next records button to display additional strings in the table. This button is not displayed when filtering events by the standard period.

- Click Apply.

The current SQL query will be overwritten. The generated SQL query is displayed in the search field.

If you want to reset the builder settings, click the Default query button.

If you want to close the builder without overwriting the existing query, click the close button.

- Click the button that runs the query to display the data in the table.

The table will display the search results based on the generated SQL query.
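The way the builder blocks combine into one statement can be sketched in a few lines. The `build_query` helper below is hypothetical and only mirrors the order of the blocks described above (SELECT, FROM, WHERE, GROUP BY, ORDER BY, LIMIT). It does not validate field names or quote values.

```python
def build_query(select=("*",), where=None, group_by=(), order_by=None,
                direction="DESC", limit=250):
    """Assemble an events query from builder-style blocks."""
    parts = ["SELECT " + ", ".join(select), "FROM `events`"]
    if where:
        parts.append("WHERE " + where)
    if group_by:
        parts.append("GROUP BY " + ", ".join(group_by))
    if order_by:
        parts.append(f"ORDER BY {order_by} {direction}")
    parts.append(f"LIMIT {limit}")  # 250 is the default mentioned above
    return " ".join(parts)


print(build_query(where="Type IN ('Base', 'Audit')", order_by="Timestamp"))
```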

When switching to another section of the web interface, the query generated in the builder is not preserved. If you return to the Events section from another section, the builder will display the default query.

For more details on SQL, refer to the ClickHouse documentation. See also KUMA operator usage and supported functions.

Manually creating an SQL query

You can use the search string to manually create SQL queries of any complexity for filtering events.

To manually generate an SQL query:

- Go to the Events section of the KUMA web interface.

An input form opens.

- Enter your SQL query into the input field.

- Click the button that runs the query.

You will see a table of events that satisfy the criteria of your query. If necessary, you can filter events by period.

Supported functions and operators

- SELECT: event fields that should be returned.

  For SELECT fields, the program supports the following functions and operators:

  - Aggregation functions: count, avg, max, min, sum.
  - Arithmetic operators: +, -, *, /, <, >, =, !=, >=, <=.

  You can combine these functions and operators.

  If you are using aggregation functions in a query, you cannot customize the events table display, sort events in ascending or descending order, or receive statistics.

- DISTINCT: removes duplicates from the result of a SELECT statement. You must use the following notation: SELECT DISTINCT SourceAddress as Addresses FROM <rest of the query>.

- FROM: data source.

  When creating a query, you need to specify the events value as the data source.

- WHERE: conditions for filtering events.

  - AND, OR, NOT, =, !=, >, >=, <, <=
  - IN
  - BETWEEN
  - LIKE
  - ILIKE
  - inSubnet
  - match (the re2 syntax of regular expressions is used in queries; special characters must be escaped with "\")

- GROUP BY: event fields or aliases to be used for grouping the returned data.

  If you are using data grouping in a query, you cannot customize the events table display, sort events in ascending or descending order, receive statistics, or perform a retroscan.

- ORDER BY: columns used as the basis for sorting the returned data. Possible values:

  - DESC: descending order.
  - ASC: ascending order.

- OFFSET: skip the indicated number of lines before printing the query results output.

- LIMIT: number of strings displayed in the table. The default value is 250.

  If you are filtering events by user-defined period and the number of strings in the search results exceeds the defined value, you can click the Show next records button to display additional strings in the table. This button is not displayed when filtering events by the standard period.
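LIMIT and OFFSET together allow paging through a long result set. The sketch below shows the arithmetic. Whether the Show next records button works this way internally is an assumption, and `page_query` is a hypothetical helper.

```python
def page_query(base_query, page, page_size=250):
    """Return the query for a zero-based page of the given size."""
    if page < 0:
        raise ValueError("page must not be negative")
    return f"{base_query} LIMIT {page_size} OFFSET {page * page_size}"


base = "SELECT * FROM `events` ORDER BY Timestamp DESC"
print(page_query(base, 0))  # first 250 rows
print(page_query(base, 2))  # rows 501 to 750
```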

Example queries:

- SELECT * FROM `events` WHERE Type IN ('Base', 'Audit') ORDER BY Timestamp DESC LIMIT 250

  In the events table, all events with the Base and Audit type are sorted by the Timestamp column in descending order. The number of strings that can be displayed in the table is 250.

- SELECT * FROM `events` WHERE BytesIn BETWEEN 1000 AND 2000 ORDER BY Timestamp ASC LIMIT 250

  All events of the events table for which the BytesIn field contains a value of received traffic in the range from 1,000 to 2,000 bytes are sorted by the Timestamp column in ascending order. The number of strings that can be displayed in the table is 250.

- SELECT * FROM `events` WHERE Message LIKE '%ssh:%' ORDER BY Timestamp DESC LIMIT 250

  In the events table, all events whose Message field contains data corresponding to the defined %ssh:% template in lowercase are sorted by the Timestamp column in descending order. The number of strings that can be displayed in the table is 250.

- SELECT * FROM `events` WHERE inSubnet(DeviceAddress, '00.0.0.0/00') ORDER BY Timestamp DESC LIMIT 250

  In the events table, all events for the hosts that are in the 00.0.0.0/00 subnet are sorted by the Timestamp column in descending order. The number of strings that can be displayed in the table is 250.

- SELECT * FROM `events` WHERE match(Message, 'ssh.*') ORDER BY Timestamp DESC LIMIT 250

  In the events table, all events whose Message field contains text corresponding to the ssh.* template are sorted by the Timestamp column in descending order. The number of strings that can be displayed in the table is 250.

- SELECT max(BytesOut) / 1024 FROM `events`

  Maximum amount of outbound traffic (KB) for the selected time period.

- SELECT count(ID) AS "Count", SourcePort AS "Port" FROM `events` GROUP BY SourcePort ORDER BY Port ASC LIMIT 250

  Number of events and port number. Events are grouped by port number and sorted by the Port column in ascending order. The number of strings that can be displayed in the table is 250.

  The ID column in the events table is named Count, and the SourcePort column is named Port.

If you want to use a special character in a query, you need to escape this character by placing a backslash (\) character in front of it.

Example:

In the events table, all events whose Message field contains text corresponding to the |
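The backslash escaping rule can be sketched in Python. The helper `escape_for_match` is hypothetical, and using Python's `re.escape` to approximate the set of re2 special characters is an assumption; the two regular expression dialects are close but not identical.

```python
import re


def escape_for_match(text):
    """Backslash-escape regular expression metacharacters in text."""
    return re.escape(text)


# The dot and the asterisk are special characters, so both get a backslash.
print(escape_for_match("ssh.*"))
```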

When creating a normalizer for events, you can choose whether to retain the field values of the raw event. The data is stored in the Extra event field. This field is searched for events by using the LIKE operator.

Example:

In the events table, all events for hosts with the IP address 00.00.00.000 where the example process is running are sorted by the Timestamp column in descending order. The number of strings that can be displayed in the table is 250. |

When switching to the query builder, the query parameters that were manually entered into the search string are not transferred to the builder so you will need to create your query again. Also, the query created in the builder does not overwrite the query that was entered into the search string until you click the Apply button in the builder window.

Aliases must not contain spaces.

For more details on SQL, refer to the ClickHouse documentation. See also the supported ClickHouse functions.

Filtering events by period

In KUMA, you can specify the time period to display events from.

To filter events by period:

- In the Events section of the KUMA web interface, open the Period drop-down list in the upper part of the window.

- If you want to filter events based on a standard period, select one of the following:

- 5 minutes

- 15 minutes

- 1 hour

- 24 hours

- In period

If you select this option, use the opened calendar to select the start and end dates of the period and click Apply Filter. The date and time format depends on your operating system's settings. You can also manually change the date values if necessary.

- Click the button that runs the query.

When the period filter is set, only events registered during the specified time interval will be displayed. The period will be displayed in the upper part of the window.
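A standard period can be thought of as a time window that ends at the current moment. The sketch below computes such a window. The `period_bounds` helper is hypothetical, and the assumption that the window ends exactly at the current time is made only for illustration.

```python
from datetime import datetime, timedelta

# The standard periods listed above.
STANDARD_PERIODS = {
    "5 minutes": timedelta(minutes=5),
    "15 minutes": timedelta(minutes=15),
    "1 hour": timedelta(hours=1),
    "24 hours": timedelta(hours=24),
}


def period_bounds(period, now):
    """Return the (start, end) of the window for a standard period."""
    return now - STANDARD_PERIODS[period], now


start, end = period_bounds("1 hour", datetime(2024, 1, 1, 12, 0))
print(start, end)
```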

You can also configure the display of events by using the events histogram that is displayed when you click the corresponding button in the upper part of the Events section. Events are displayed if you click the relevant data series or select the relevant time period and click the Show events button.

Displaying names instead of IDs

When accessing certain event fields with IDs, KUMA returns the corresponding names rather than IDs. This helps make the information more readable. For example, if you access the TenantID event field (which stores the tenant ID), you get the value of the TenantName event field (which stores the tenant name).

When exporting events, values of both fields are written to the file, the ID as well as the name.

The table below lists the fields that are substituted when accessed:

| Requested field | Returned field |
|---|---|

Substitution does not occur if an alias is assigned to the field in the SQL query. Examples:

- The query SELECT TenantID FROM `events` LIMIT 250 displays the name of the tenant in the TenantID field of the search result.

- The query SELECT TenantID AS Tenant_name FROM `events` LIMIT 250 displays the tenant ID in the Tenant_name field of the search result.
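The substitution rule can be modeled in a few lines. This is only an illustration. The `displayed_value` helper and the sample event are hypothetical, and the mapping contains only the TenantID and TenantName pair that the text names explicitly.

```python
# Only the pair named in the text; other substituted fields are not listed here.
NAME_FIELDS = {"TenantID": "TenantName"}


def displayed_value(event, field, alias=None):
    """Return what the search result shows for a requested field."""
    if alias is None and field in NAME_FIELDS:
        return event[NAME_FIELDS[field]]  # the name is shown instead of the ID
    return event[field]  # with an alias, the stored value is returned unchanged


event = {"TenantID": "42", "TenantName": "Main"}  # sample data
print(displayed_value(event, "TenantID"))
print(displayed_value(event, "TenantID", alias="Tenant_name"))
```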

Presets

You can use presets to simplify work with queries if you regularly view data for a specific set of event fields. In the line with the SQL query, you can type Select * and select a saved preset; in that case, the output is limited only to the fields specified in the preset. This method slows down performance but eliminates the need to write a query manually every time. Presets are saved on the KUMA Core server and are available to all KUMA users of the specified tenant.

To create a preset:

- In the Events section, click the icon that opens the events table settings.

- In the window that opens, on the Event field columns tab, select the required fields.

To simplify your search, you can start typing the field name in the Search area.

- To save the selected fields, click Save current preset.

The New preset window opens.

- In the window that opens, specify the Name of the preset, and in the drop-down list, select the Tenant.

- Click Save.

The preset is created and saved.

To apply a preset:

- In the query entry field, enter Select *.

- In the Events section of the KUMA web interface, click the icon.

- In the opened window, use the Presets tab to select the relevant preset and click the button.

The fields from the selected preset are added to the SQL query field, and the columns are added to the table. No changes are made in Builder.

- Click the button to execute the query.

After the query execution completes, the columns are filled in.

Limiting the complexity of queries in alert investigation mode

When investigating an alert, the complexity of SQL queries for event filtering is limited if the Related to alert option is selected in the drop-down list. In this case, only the functions and operators listed below are available for event filtering.

If the All events option is selected from the drop-down list, these limitations are not applied.

- SELECT

  - The * character is used as a wildcard to represent any number of characters.

- WHERE

  - AND, OR, NOT, =, !=, >, >=, <, <=

  - IN

  - BETWEEN

  - LIKE

  - inSubnet

  Examples:

  - WHERE Type IN ('Base', 'Correlated')

  - WHERE BytesIn BETWEEN 1000 AND 2000

  - WHERE Message LIKE '%ssh:%'

  - WHERE inSubnet(DeviceAddress, '10.0.0.1/24')

- ORDER BY

  Sorting can be done by column.

- OFFSET

  Skips the indicated number of lines before printing the query results.

- LIMIT

  The default value is 250.

If you are filtering events by a user-defined period and the number of rows in the search results exceeds the defined value, you can click the Show next records button to display additional rows in the table. This button is not displayed when filtering events by the standard period.

When filtering by alert-related events in alert investigation mode, you cannot group the returned data, perform operations on the data of event fields, or assign names to the columns of displayed data.
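The following query is a sketch of a filter that stays within these limits. It combines the WHERE examples listed for this mode with ORDER BY, LIMIT, and OFFSET, and it uses no aliases, grouping, or operations on field values. Sorting by Timestamp in descending order and the offset of 250 (which skips the first page of results) are illustrative assumptions.

```sql
-- Only clauses from the allowed list are used: WHERE with IN, LIKE and inSubnet,
-- then ORDER BY, LIMIT and OFFSET. No aliases, GROUP BY, or field operations.
SELECT * FROM `events`
WHERE Type IN ('Base', 'Correlated')
  AND Message LIKE '%ssh:%'
  AND inSubnet(DeviceAddress, '10.0.0.1/24')
ORDER BY Timestamp DESC
LIMIT 250 OFFSET 250
```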

Saving and selecting events filter configuration

In KUMA, you can save a filter configuration and use it in the future. Other users can also use the saved filters if they have the appropriate access rights. When saving a filter, you are saving the configured settings of all the active filters at the same time, including the time-based filter, query builder, and the events table settings. Search queries are saved on the KUMA Core server and are available to all KUMA users of the selected tenant.

To save the current settings of the filter, query, and period:

- In the Events section of the KUMA web interface, click the icon next to the filter expression and select Save current filter.

- In the window that opens, enter the name of the filter configuration in the Name field. The name can contain up to 128 Unicode characters.

- In the Tenant drop-down list, select the tenant that will own the created filter.

- Click Save.

The filter configuration is now saved.

To select a previously saved filter configuration:

In the Events section of the KUMA web interface, click the icon next to the filter expression and select the relevant filter.

The selected configuration is active, which means that the search field is displaying the search query, and the upper part of the window is showing the configured settings for the period and frequency of updating the search results. Click the button to submit the search query.

You can click the icon near the filter configuration name to make it a default filter.

Deleting event filter configurations

To delete a previously saved filter configuration:

- In the Events section of the KUMA web interface, click the icon next to the filter search query and click the icon next to the configuration that you need to delete.

- Click OK.

The filter configuration is now deleted for all KUMA users.

Supported ClickHouse functions

The following ClickHouse functions are supported in KUMA:

- Arithmetic functions.

- Arrays: all functions except the following:

  - has

  - range

  - functions in which higher-order functions must be used (lambda expressions (->))

- Comparison functions: all operators except == and less.

- Logical functions: "not" function only.

- Type conversion functions.

- Date/time functions: all except date_add and date_sub.

- String functions.

- String search functions: all functions except the following:

  - position

  - multiSearchAllPositions, multiSearchAllPositionsUTF8, multiSearchFirstPosition, multiSearchFirstIndex, multiSearchAny

  - like and ilike

- Conditional functions: simple if operator only (ternary if and multiIf operators are not supported).

- Mathematical functions.

- Rounding functions.

- Functions for splitting and merging strings and arrays.

- Bit functions.

- Functions for working with UUIDs.

- Functions for working with URLs.

- Functions for working with IP addresses.

- Functions for working with Nullable arguments.

- Functions for working with geographic coordinates.

In KUMA 2.1.3, errors with the operation of the DISTINCT operator have been fixed. In this case, you must use the following notation: SELECT DISTINCT SourceAddress as Addresses FROM <rest of the query>.

In KUMA 2.1.1, the SELECT DISTINCT SourceAddress / SELECT DISTINCT(SourceAddress) / SELECT DISTINCT ON (SourceAddress) operators work incorrectly.

Search and replace functions in strings, and functions from other sections are not supported.

For more details on SQL, refer to the ClickHouse documentation.
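As an illustration of the categories listed above, the following sketch combines a string function (upper, length), an arithmetic operation (division), a rounding function (round), and a comparison operator (>=). These are standard ClickHouse functions from the named categories; whether each one works in a particular KUMA version has not been verified here. The aliases Vendor, MessageLength, and KiBIn are hypothetical names chosen for the example.

```sql
-- upper() and length() are ClickHouse string functions,
-- round() is a rounding function, and "/" is an arithmetic operation.
SELECT
    upper(DeviceVendor) AS Vendor,
    length(Message) AS MessageLength,
    round(BytesIn / 1024, 1) AS KiBIn
FROM `events`
WHERE BytesIn >= 1000
LIMIT 250
```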

Viewing event detail areas

To view information about an event:

- In the program web interface window, select the Events section.

- Search for events by using the query builder or by entering a query in the search field.

The event table is displayed.

- Select the event whose information you want to view.

The event details window opens.

The Event details area appears in the right part of the web interface window and contains a list of the event's parameters with values. In this area you can:

- Include the selected field in the search or exclude it from the search by clicking the relevant icon next to the setting value.

- Click a file hash in the FileHash field to open a list in which you can select one of the following actions:

  - Show info from Threat Lookup.

    This is available when integrated with Kaspersky Threat Intelligence Portal.

  - Add to Internal TI of CyberTrace.

    This is available when integrated with Kaspersky CyberTrace.

- Open a window containing information about the asset if it is mentioned in the event fields and registered in the program.

- You can click the link containing the collector name in the Service field to view the settings of the service that registered the event.

You can also link an event to an alert if the program is in alert investigation mode and open the Correlation event details window if the selected event is a correlation event.

In the Event details area, the name of the described object is shown instead of its ID in the values of the following settings. However, if you change the filtering of events by one of these settings (for example, by clicking the icon that excludes events with a certain setting-value combination from search results), the object's ID, and not its name, is added to the SQL query:

- TenantID

- ServiceID

- DeviceAssetID

- SourceAssetID

- DestinationAssetID

- SourceAccountID

- DestinationAccountID

Exporting events

In KUMA, you can export information about events to a TSV file. The selection of events that will be exported to a TSV file depends on filter settings. The information is exported from the columns that are currently displayed in the events table. The columns in the exported file are populated with the available data even if they did not display in the events table in the KUMA web interface due to the special features of the SQL query.

To export information about events:

- In the Events section of the KUMA web interface, open the drop-down list and choose Export TSV.

The new export TSV file task is created in the Task manager section.

- Find the task you created in the Task manager section.

When the file is ready to download, an icon appears in the Status column of the task.

- Click the task type name and select Upload from the drop-down list.

The TSV file is downloaded and saved according to your web browser's settings. By default, the file name is event-export-<date>_<time>.tsv.

Configuring the table of events

Responses to user SQL queries are presented as a table in the Events section. The fields selected in the custom query appear at the end of the table, after the default columns. This table can be updated.

The following columns are displayed in the events table by default:

- Tenant.

- Timestamp.

- Name.

- DeviceProduct.

- DeviceVendor.

- DestinationAddress.

- DestinationUserName.

In KUMA, you can customize the displayed set of event fields and their display order. The selected configuration can be saved.

When using SQL queries with data grouping and aggregation for filtering events, statistics are not available and the order of displayed columns depends on the specific SQL query.
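For example, a query with grouping and aggregation might look like the sketch below. It assumes that the count aggregate function is available and that ID is the event identifier field; neither is stated in this section. With such a query, the table would be expected to show only the DeviceProduct and Total columns, in the order given in the SELECT clause, rather than the default column set.

```sql
-- Count events per product. ID is assumed to be the event identifier field,
-- and Total is a hypothetical alias for the aggregated column.
SELECT DeviceProduct, count(ID) AS Total
FROM `events`
GROUP BY DeviceProduct
ORDER BY Total DESC
LIMIT 250
```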

In the events table, in the event details area, in the alert window, and in the widgets, the names of assets, accounts, and services are displayed instead of the IDs as the values of the SourceAssetID, DestinationAssetID, DeviceAssetID, SourceAccountID, DestinationAccountID, and ServiceID fields. When exporting events to a file, the IDs are saved, but columns with names are added to the file. The IDs are also displayed when you point the mouse over the names of assets, accounts, or services.

Searching for fields with IDs is only possible using IDs.

To configure the fields displayed in the events table:

- Click the icon in the top right corner of the events table.

You will see a window for selecting the event fields that should be displayed in the events table.

- Select the check boxes opposite the fields that you want to view in the table. You can search for relevant fields by using the Search field.